1. Description

1.1. What is ST Edge AI?

The ST Edge AI utility provides a complete and unified Command Line Interface (CLI) to generate, from a pre-trained Neural Network (NN), an optimized model or a library for all STM32 devices including MPU, MCU, ISPU and MSC. It consists of three main commands: analyze, generate and validate. Each command can be used independently of the others, with the same set of common options (model files, output directory, and so on) and with its own specific options.

In the case of the STM32MPU, you can use the generate command in ST Edge AI to convert a Neural Network (NN) model to an optimized Network Binary Graph (NBG). This NBG is the only format that allows you to run an NN model using the STM32MP2x Neural Processing Unit (NPU) acceleration. You can also use the validate command to verify that the model outputs are similar on an STM32MPU platform and on a Linux PC.

| The analyze command in ST Edge AI for STM32MPUs is currently under development and will be available in a future release. |

1.2. Main features

ST Edge AI is delivered as an archive containing an installer that installs the tool on the computer. This installer lets you select the ST Edge AI components to install. Some of these components are not available on all operating systems; the STM32MP2 component is available only for Linux.

The tool already contains the complete Python environment required to run a conversion. The objective is to allow the user to convert and execute an NN model easily on STM32MP2x platforms. To this end, the tool converts a quantized TensorFlow™ Lite[1] or ONNX™[2] model into the NBG format.

The Network Binary Graph (NBG) is the precompiled NN model format using the OpenVX™ graph representation. This is the only NN format that can be loaded and executed directly on the NPU of STM32MP2x boards.

For best performance, the model provided to the tool must be quantized using the 8-bit per-tensor asymmetric quantization scheme. If the quantization scheme is 8-bit per-channel, the model mainly runs on the GPU instead of the NPU.
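To make the per-tensor asymmetric scheme concrete, here is a minimal pure-Python sketch. It assumes the usual affine mapping q = round(x / scale) + zero_point with a single (scale, zero_point) pair for the whole tensor, and borrows the pair Q(0.0078125, 128) from the validation log shown later in this article. The function names are hypothetical and are not part of ST Edge AI.

```python
def quantize_per_tensor(values, scale, zero_point, qmin=0, qmax=255):
    """Map floats to uint8 codes using one (scale, zero_point) pair for the whole tensor."""
    codes = []
    for x in values:
        # Python's round() rounds halves to even; real frameworks may round differently.
        q = round(x / scale) + zero_point
        codes.append(max(qmin, min(qmax, q)))  # clamp to the uint8 range
    return codes


def dequantize_per_tensor(codes, scale, zero_point):
    """Inverse mapping: recover approximate float values from the uint8 codes."""
    return [(q - zero_point) * scale for q in codes]


# Assumed example parameters, taken from the Q(0.0078125, 128) entry in the log.
scale, zero_point = 0.0078125, 128
codes = quantize_per_tensor([-1.0, 0.0, 0.5, 0.9921875], scale, zero_point)
print(codes)                                             # [0, 128, 192, 255]
print(dequantize_per_tensor(codes, scale, zero_point))   # [-1.0, 0.0, 0.5, 0.9921875]
```

A per-channel scheme would instead carry one (scale, zero_point) pair per output channel, which is the case the paragraph above says mainly runs on the GPU.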

The tool does not support custom operators. If the provided model contains custom operations, they are automatically removed or the generation fails.

If the output of the model is already post-processed using a custom operator such as TFLite post-process, the post-processing layer is deleted. To prevent this situation:

- Provide a model that does not include the custom post-process layer, and code the post-process function inside your application. The model runs on the NPU, and the post-processing is executed on the CPU.

- Split your model to execute the core of the model on the NPU using the NBG model, and the post-processing layer on the CPU using your TensorFlow™ Lite or ONNX™ model with the stai_mpu API.
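As an illustration of the first option, the sketch below shows what a small CPU-side post-processing step could look like for a classifier: it dequantizes raw uint8 scores and keeps the best classes. It is only a sketch under stated assumptions: the raw output is assumed to be already available as a list of integers, the scores are made up, the scale and zero point mimic the QLinear(0.00390625, 0, uint8) output reported in the validation log later in this article, and `top_k` is a hypothetical helper, not a stai_mpu function.

```python
def top_k(codes, scale, zero_point, k=3):
    """Dequantize uint8 scores and return the k best (class_index, score) pairs."""
    scores = [(q - zero_point) * scale for q in codes]
    # Sort class indices by decreasing score; ties keep their original order.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [(i, scores[i]) for i in ranked[:k]]


# Made-up raw NPU output for a 6-class model (illustrative values only).
raw_output = [0, 3, 184, 12, 0, 40]
print(top_k(raw_output, scale=0.00390625, zero_point=0, k=2))
# [(2, 0.71875), (5, 0.15625)]
```

A detection model would need a heavier post-process (box decoding, non-maximum suppression), but the split is the same: the NBG produces raw tensors on the NPU and the application code interprets them on the CPU.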

Once this NBG is generated, you can benchmark it or develop your AI application. For further information, refer to the articles explaining how to deploy your NN model and how to benchmark your NN model.

2. Installation

Download the ST Edge AI tool here: https://www.st.com/en/development-tools/stedgeai-core.html

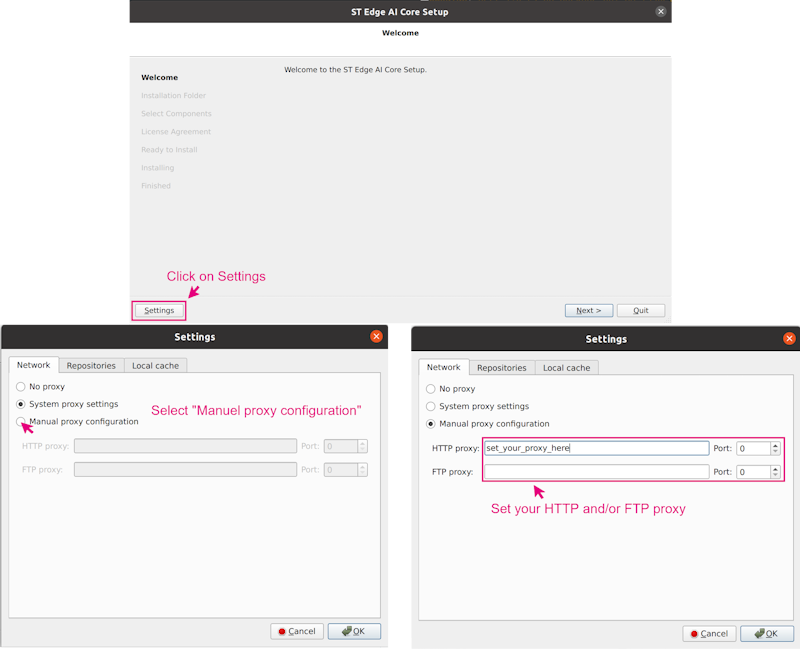

If you need to set up specific proxy settings, follow the step-by-step procedure to set up the proxy:

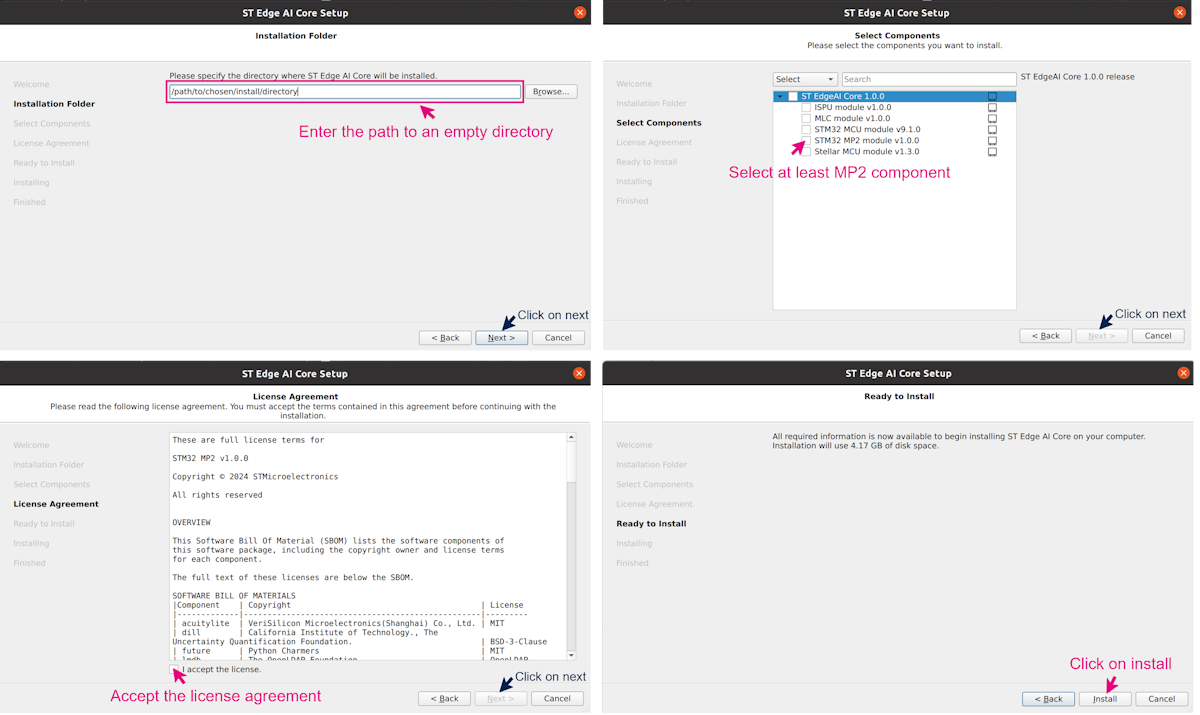

Then, follow the step-by-step procedure to install the tool:

| A maintenancetool is also installed, which lets you add, remove or update ST Edge AI components. |

The maintenancetool is an executable file located in your installation folder. When launched, it lets you add or remove a component, update an existing component (if an update is available), and uninstall the ST Edge AI tool.

3. How to use the tool

3.1. Script utilization

The main tool interface is the stedgeai binary. First, go to the binary directory:

cd <your_installation_path>/2.2/Utilities/linux

The features of the stedgeai binary for the stm32mp25 target are the following:

| The command "stedgeai --help" prints many options, but only the following options affect the stm32mp25 target. |

usage: stedgeai --model FILE --target stm32mp25 [--workspace DIR] [--output DIR] [--no-report] [--no-workspace]

[--input-data-type float32] [--output-data-type float32] [--entropy ENTROPY] [--batch-size INT]

[--mode host|target|host-io-only|target-io-only] [--desc DESC] [--valinput FILE [FILE ...]]

[--valoutput FILE [FILE ...]] [--range MIN MAX [MIN MAX ...]] [--save-csv] [--classifier]

[--no-check] [--no-exec-model] [--seed SEED] [-h] [--version] [--tools-version]

[--verbosity [0|1|2|3]] [--quiet]

generate|validate

ST Edge AI Core v2.2.0 (STM32MP2 2.2.0)

command:

generate|validate must be the first argument (default: generate)

validate

validate the converted model by itself or versus the original model

generate

generate the converted model for the target device

common options:

--model FILE, -m FILE

paths of the original model files

--target stm32|stellar-e|ispu|mlc

target/device selector

...

--workspace DIR, -w DIR

workspace folder to use (default: st_ai_ws)

--output DIR, -o DIR folder where the generated files are saved (default: st_ai_output)

...

--input-data-type float32

indicate the expected inputs data type of the generated model

Multiple inputs: in_data_type_1,in_data_type_2,...

If one data type is given, it will be applied for all inputs

--output-data-type float32

indicate the expected outputs data type of the generated model

Multiple outputs: out_data_type_1,out_data_type_2,...

If one data type is given, it will be applied for all outputs

...

specific generate options:

--entropy ENTROPY requested entropy when converting per-channel model to per-tensor NBG model

specific validate options:

--batch-size INT, -b INT

number of samples for the validation

--mode host|target|host-io-only|target-io-only

validation mode to use

--desc DESC, -d DESC

COM port and baud rate to use for communication with the board. Syntax:

serial[:COMPORT][:baudrate]

--valinput FILE [FILE ...], -vi FILE [FILE ...]

files containing data to use as input for validation

--valoutput FILE [FILE ...], -vo FILE [FILE ...]

files containing data to use as reference output for validation

--range MIN MAX [MIN MAX ...]

range of values to generate the random input data (default: [0 1])

--save-csv force the storage of all io data in csv files

--classifier specify that the model is a classifier (otherwise auto-detection is performed)

--no-check disable internal checks (mode/model type dependent)

--no-exec-model disable execution of the original model

--seed SEED

additional options:

--no-report do not generate the report file

--no-workspace do not create the workspace folder

-h, --help show this help message and exit (use --target to get target specific help)

--version print the version of the tool

--tools-version print the versions of the third party packages used by the tool

--verbosity [0|1|2|3], -v [0|1|2|3], --verbose [0|1|2|3]

set verbosity level

--quiet disable the progress-bar

examples:

stedgeai analyze mode is not currently supported

stedgeai generate --target <target> -m myquantizedmodel.tflite

stedgeai validate --target <target> -m mymodel.tflite --mode target

3.2. Using generate command

To generate an NBG model, you need to provide a quantized TensorFlow™ Lite or ONNX™ model, and select the correct target: stm32mp25.

| The model type is not required, since the tool recognizes it automatically. |

3.2.1. Generate NBG from TensorFlow™ Lite or ONNX™

To convert a TensorFlow™ Lite model to NBG:

./stedgeai generate -m path/to/tflite/model --target stm32mp25

To convert an ONNX™ model to NBG:

./stedgeai generate -m path/to/onnx/model --target stm32mp25

This command generates two files: the .nb file, which is the NBG model, and a .txt file, which is the report of the generate command.

- The .nb file is located in the output path specified by the --output option. Otherwise, by default, the .nb file is located in the st_ai_output directory.

- The report (named report_modelName_stm32mp25.txt) is located in the workspace folder specified by the --workspace option. Otherwise, by default, the report is located in the st_ai_ws directory.
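When the conversion is driven from a script, the command line can be assembled programmatically. The following sketch uses only options listed in the help text above (-m, --target, --output, --workspace, --no-report); `build_generate_cmd` is a hypothetical helper, and the model and folder names are placeholders.

```python
import shlex


def build_generate_cmd(model, target="stm32mp25", output=None,
                       workspace=None, no_report=False, binary="./stedgeai"):
    """Return the argv list for a 'stedgeai generate' call (nothing is executed here)."""
    cmd = [binary, "generate", "-m", model, "--target", target]
    if output:
        cmd += ["--output", output]        # folder for the .nb file
    if workspace:
        cmd += ["--workspace", workspace]  # folder for the report
    if no_report:
        cmd.append("--no-report")
    return cmd


cmd = build_generate_cmd("path/to/model.tflite", output="nbg_out")
print(shlex.join(cmd))
# ./stedgeai generate -m path/to/model.tflite --target stm32mp25 --output nbg_out
```

To actually launch the conversion, the list can be passed to `subprocess.run(cmd, check=True)` from the directory that contains the stedgeai binary.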

3.2.2. Generate NBG from TensorFlow™ Lite or ONNX™ without report

To generate an NBG model without the report, add the --no-report option to your command line.

To convert a TensorFlow™ Lite model to NBG without the report:

./stedgeai generate -m path/to/tflite/model --target stm32mp25 --no-report

To convert an ONNX™ model to NBG without the report:

./stedgeai generate -m path/to/onnx/model --target stm32mp25 --no-report

This command generates a .nb file located in the output folder. If you use the --output option to specify a directory, the NBG is located inside it; otherwise, the default output folder is st_ai_output.

3.2.3. Generate NBG from TensorFlow™ Lite or ONNX™ with float I/O

To generate an NBG model with float32 input and output, or with float32 output only, add the --input-data-type and --output-data-type options to your command line.

| The argument --input-data-type float32 has no effect if --output-data-type is not set to float32. However, --output-data-type float32 can be used alone to generate a model with float32 output. |

To convert a model to NBG with float32 I/O:

./stedgeai generate -m path/to/TfliteorOnnx/model --target stm32mp25 --input-data-type float32 --output-data-type float32

To convert a model to NBG with float32 output only:

./stedgeai generate -m path/to/TfliteorOnnx/model --target stm32mp25 --output-data-type float32

This command generates a .nb file located in the output folder. If you use the --output option to specify a directory, the NBG is located inside it; otherwise, the default output folder is st_ai_output.
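The constraint between the two data-type options can be captured in a small guard when commands are built from a script. This is a sketch only: `float_io_flags` is a hypothetical helper, and rejecting the unsupported combination with an exception is a design choice made here, not the behavior of the tool, which according to the note above simply ignores the input option in that case.

```python
def float_io_flags(float_input=False, float_output=False):
    """Return the data-type options for a generate call, rejecting the no-op combination."""
    if float_input and not float_output:
        # Per the note above, this combination would have no effect on the generated model.
        raise ValueError("--input-data-type float32 requires --output-data-type float32")
    flags = []
    if float_input:
        flags += ["--input-data-type", "float32"]
    if float_output:
        flags += ["--output-data-type", "float32"]
    return flags


print(float_io_flags(float_input=True, float_output=True))
# ['--input-data-type', 'float32', '--output-data-type', 'float32']
print(float_io_flags(float_output=True))
# ['--output-data-type', 'float32']
```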

3.3. Using validate command

Once the model is converted to NBG with the ST Edge AI generate command, you can verify whether the NBG outputs are similar to those of the original TensorFlow™ Lite or ONNX™ model.

To validate a model on an STM32MPU platform, you need an STM32MP25 board connected to your host Linux PC. There are two ways to do this:

- Connect the PC to the USB-C DRD port of the STM32MP25 with a USB-C to USB-C or USB-A to USB-C cable. This creates an Ethernet-over-USB connection with the following IP address: 192.168.7.1.

- Or, connect the STM32MP2 to the internet and make sure it has an IP address.

The validate command sends the model to the board and runs the inference directly on the CPU or on the GPU/NPU, depending on the options passed as arguments. In parallel, the same model runs on the host PC, and the results of the two executions are compared.

A set of metrics is returned:

- acc : Accuracy (class, axis=-1)

- rmse : Root Mean Squared Error

- mae : Mean Absolute Error

- l2r : L2 relative error

- mean : Mean error

- std : Standard deviation error

- nse : Nash-Sutcliffe efficiency criteria, bigger is better, best=1, range=(-inf, 1]

- cos : Cosine Similarity, bigger is better, best=1, range=(0, 1]

These metrics show whether the model executed on the board produces results similar to those obtained on the host PC.
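For readers who want to reproduce these figures on their own data, the sketch below computes the error metrics with their common textbook definitions. The exact formulas used inside ST Edge AI are not given in this article, so the definitions here are assumptions: in particular the sign convention of the mean error (prediction minus reference) and the use of the population standard deviation. The function name `error_metrics` is hypothetical.

```python
import math


def error_metrics(ref, pred):
    """Compare two equally sized lists of floats (reference vs. prediction)."""
    n = len(ref)
    err = [p - r for r, p in zip(ref, pred)]           # assumed sign: pred - ref
    sq_err = sum(e * e for e in err)
    mean_err = sum(err) / n
    ref_mean = sum(ref) / n
    dot = sum(r * p for r, p in zip(ref, pred))
    norm_ref = math.sqrt(sum(r * r for r in ref))
    norm_pred = math.sqrt(sum(p * p for p in pred))
    # Note: an all-zero or constant reference makes some denominators zero.
    return {
        "rmse": math.sqrt(sq_err / n),
        "mae": sum(abs(e) for e in err) / n,
        "l2r": math.sqrt(sq_err) / norm_ref,
        "mean": mean_err,
        "std": math.sqrt(sum((e - mean_err) ** 2 for e in err) / n),
        "nse": 1.0 - sq_err / sum((r - ref_mean) ** 2 for r in ref),
        "cos": dot / (norm_ref * norm_pred),
    }


metrics = error_metrics([1.0, 2.0, 3.0], [1.0, 2.0, 4.0])
print(metrics["nse"])  # 0.5
```

With quantized outputs, both tensors would first be dequantized with their scale and zero point, as the notes in the cross accuracy report indicate.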

The descriptor argument -d/--desc is mandatory to run the validate command on the target. The fields of the descriptor are separated by the : character. On STM32MPUs, the descriptor can contain four values:

- mpu : selects the execution of the STM32MPU pass. This is the only mandatory field.

- <ip_address> : by default, the IP address is set to 192.168.7.1, which is the address assigned when the board is connected to a host PC through the USB Type-C DRD port.

- cpu or npu : lets the user choose between CPU and GPU/NPU execution.

- model_path : (optional) the model path can be passed as an argument; otherwise, it is automatically taken from the -m/--model option.

./stedgeai validate -m path/to/TfliteorOnnx/model --target stm32mp25 --mode target --desc mpu:<your_ip_addr>:npu
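The descriptor string can also be built by a small helper when validation is scripted. This sketch assumes the field order seen in the command above (mpu, IP address, device), that the accepted device values are cpu and npu, and that the optional model path comes last, as in the list of fields; `build_mpu_desc` is a hypothetical name. An IPv6 address would clash with the : separator and is not handled.

```python
def build_mpu_desc(ip_address="192.168.7.1", device="npu", model_path=None):
    """Return a --desc value of the form mpu:<ip_address>:<device>[:<model_path>]."""
    if device not in ("cpu", "npu"):
        raise ValueError("device must be 'cpu' or 'npu'")
    parts = ["mpu", ip_address, device]
    if model_path:
        parts.append(model_path)  # assumed to be the last field
    return ":".join(parts)


print(build_mpu_desc())                           # mpu:192.168.7.1:npu
print(build_mpu_desc("10.0.0.5", device="cpu"))   # mpu:10.0.0.5:cpu
```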

This is the expected output:

ST Edge AI Core v2.2.0

Setting validation data...

generating random data, size=10, seed=42, range=(0, 1)

I[1]: (10, 128, 128, 3)/float32, min/max=[-0.999996, 0.992184], mean/std=[-0.003425, 0.574852]

c/I[1] conversion [Q(0.00781250,128)]-> (10, 128, 128, 3)/uint8, min/max=[0, 255], mean/std=[127.562195, 73.582368]

m/I[1] conversion [Q(0.00781250,128)]-> (10, 128, 128, 3)/uint8, min/max=[0, 255], mean/std=[127.562195, 73.582368]

no output/reference samples are provided

Creating c (debug) info json file <pwd>/stedgeai/install/2.2/Utilities/linux/stm32ai_output/network_c_info.json

Exec/report summary (validate)

--------------------------------------------------------------------------------------------------------------------------------------------------

model file : <pwd>/to/mobilenet_v1_0.5_128_quant.tflite

type : tflite

c_name : network

options : allocate-inputs, allocate-outputs

optimization : balanced

target/series : stm32mp2

workspace dir : <pwd>/stedgeai/install/2.2/Utilities/linux/stm32ai_ws

output dir : <pwd>/stedgeai/install/2.2/Utilities/linux/stm32ai_output

model_fmt : sa/ua per tensor

model_name : mobilenet_v1_0_5_128_quant

model_hash : 0x26d5bf6ff386487dae44d80ad833667b

params # : 1,326,633 items (1.28 MiB)

--------------------------------------------------------------------------------------------------------------------------------------------------

input 1/1 : 'input', uint8(1x128x128x3), 48.00 KBytes, QLinear(0.007812500,128,uint8), activations

output 1/1 : 'nl_30_0_conversion', uint8(1x1001), 1001 Bytes, QLinear(0.003906250,0,uint8), activations

macc : 49,194,388

weights (ro) : 1,346,052 B (1.28 MiB) (1 segment) / -3,960,480(-74.6%) vs float model

activations (rw) : 134,240 B (131.09 KiB) (1 segment) *

ram (total) : 134,240 B (131.09 KiB) = 134,240 + 0 + 0

--------------------------------------------------------------------------------------------------------------------------------------------------

(*) 'input'/'output' buffers are allocated in the activations buffer

Running the TFlite model...

PASS: 86%|█████████████████████████████████████████████████████████████████████████████████ | 157/183 [00:04<00:00, 41.69it/s]

INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

Running the ST.AI c-model (AI RUNNER)...(name=network, mode=TARGET)

Target inference running on NPU using NBG model: ./stm32ai_output/mobilenet_v1_0.5_128_quant.nb

ETHERNET:<ip_addr>3:./stm32ai_output/mobilenet_v1_0.5_128_quant.nb:connected

['network']

Summary 'network' - ['network']

---------------------------------------------------------------------------------------------------

I[1/1] 'input_0_' : uint8[1,128,128,3], 49152 Bytes, QLinear(0.007812500,128.0,uint8), None

O[1/1] 'output_0_' : uint8[1,1001], 1001 Bytes, QLinear(0.003906250,0.0,uint8), None

n_nodes : None

activations : []

weights : []

macc : 0

compile_datetime : <undefined>

---------------------------------------------------------------------------------------------------

protocol : <undefined>

tools : ST.AI v6.1.0

runtime lib : v6.1.0

capabilities : IO_ONLY

device.desc : MP2 VSI NPU

---------------------------------------------------------------------------------------------------

Warning: C-network signature checking is skipped on STM32 MPU

ST.AI Profiling results v2.0 - "network"

------------------------------------------------------------

nb sample(s) : 10

duration : 4.535 ms by sample (4.204/7.150/0.872)

macc : 0

------------------------------------------------------------

Statistic per tensor

-----------------------------------------------------------------------------

tensor # type[shape]:size min max mean std name

-----------------------------------------------------------------------------

10 u8[1,128,128,3]:49152 0 255 127.562 73.582

10 u8[1,1001]:1001 0 184 0.237 3.397

-----------------------------------------------------------------------------

Saving validation data...

output directory: <pwd>/stedgeai/install/2.2/Utilities/linux/stm32ai_output

creating <pwd>/stedgeai/install/2.2/Utilities/linux/stm32ai_output/network_val_io.npz

m_outputs_1: (10, 1001)/uint8, min/max=[0, 181], mean/std=[0.237862, 3.364958], nl_30_0_conversion

c_outputs_1: (10, 1, 1, 1001)/uint8, min/max=[0, 184], mean/std=[0.236663, 3.397366], scale=0.003906250 zp=0, nl_30_0_conversion

Computing the metrics...

Cross accuracy report #1 (reference vs C-model)

----------------------------------------------------------------------------------------------------

notes:

- r/uint8 data are dequantized with s=0.00390625 zp=0

- p/uint8 data are dequantized with s=0.00390625 zp=0

- ACC metric is not computed ("--classifier" option can be used to force it)

- the output of the reference model is used as ground truth/reference value

- 10 samples (1001 items per sample)

acc=n.a. rmse=0.000732511 mae=0.000065559 l2r=0.055063117 mean=0.000005 std=0.000733 nse=0.996897 cos=0.998515

Evaluation report (summary)

----------------------------------------------------------------------------------------------------------------------------------------------------------------

Output acc rmse mae l2r mean std nse cos tensor

----------------------------------------------------------------------------------------------------------------------------------------------------------------

X-cross #1 n.a. 0.000732511 0.000065559 0.055063117 0.000005 0.000733 0.996897 0.998515 'nl_30_0_conversion', 10 x uint8(1x1001), m_id=[30]

----------------------------------------------------------------------------------------------------------------------------------------------------------------

acc : Accuracy (class, axis=-1)

rmse : Root Mean Squared Error

mae : Mean Absolute Error

l2r : L2 relative error

mean : Mean error

std : Standard deviation error

nse : Nash-Sutcliffe efficiency criteria, bigger is better, best=1, range=(-inf, 1]

cos : COsine Similarity, bigger is better, best=1, range=(0, 1]

Creating txt report file <pwd>/stedgeai/install/2.2/Utilities/linux/stm32ai_output/network_validate_report.txt

elapsed time (validate): 41.593s

4. References