This article gives information about the Linux® Remote Processor Messaging (RPMsg) framework. The RPMsg framework is a virtio-based messaging bus that allows a local processor to communicate with remote processors available on the system.

1. Framework purpose

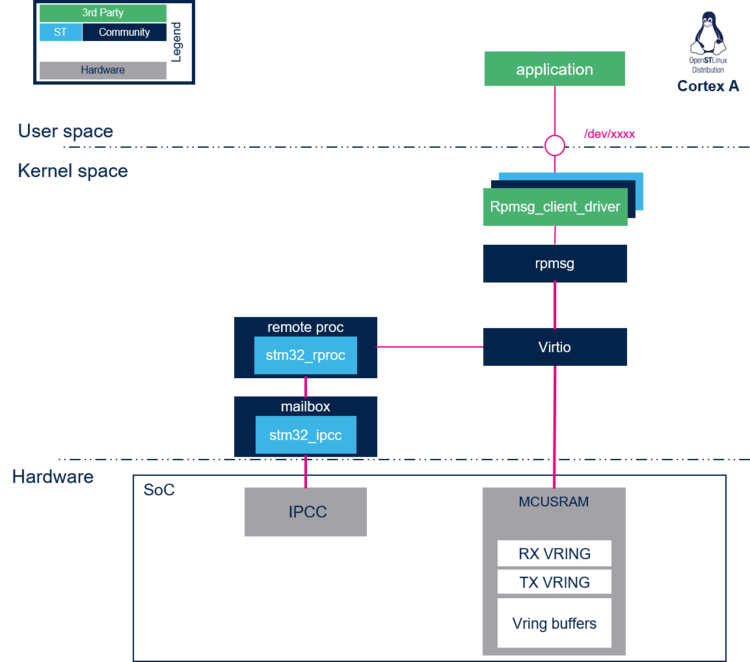

The Linux® RPMsg framework is a messaging mechanism implemented on top of the virtio framework[1][2] to communicate with a remote processor. It is based on virtio vrings to send/receive messages to/from the remote CPU over shared memory.

The vrings are unidirectional: one vring is dedicated to messages sent to the remote processor, and the other is used for messages received from the remote processor. In addition, shared buffers are created in a memory space visible to both processors.

The Mailbox framework is then used to notify cores when new messages are waiting in the shared buffers.
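
The data path described above can be pictured with a deliberately simplified model. This is a sketch only, not the actual virtio vring layout: each direction gets its own one-way ring of shared-buffer indices, and a flag stands in for the mailbox notification. All names (`ring_t`, `ring_push`, `ring_pop`, `RING_SIZE`) are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define RING_SIZE 8u /* hypothetical depth (power of two) */

/* One-way queue: exactly one side produces, the other side consumes. */
typedef struct {
    uint16_t slots[RING_SIZE]; /* indices of buffers in shared memory */
    uint16_t head;             /* advanced by the producer only */
    uint16_t tail;             /* advanced by the consumer only */
    bool     kicked;           /* stands in for the mailbox notification */
} ring_t;

/* Producer side: queue a buffer index and notify the peer. */
static bool ring_push(ring_t *r, uint16_t buf_idx)
{
    if ((uint16_t)(r->head - r->tail) == RING_SIZE)
        return false; /* ring full */
    r->slots[r->head % RING_SIZE] = buf_idx;
    r->head++;
    r->kicked = true;
    return true;
}

/* Consumer side: fetch the oldest queued buffer index, if any. */
static bool ring_pop(ring_t *r, uint16_t *buf_idx)
{
    if (r->head == r->tail)
        return false; /* ring empty */
    *buf_idx = r->slots[r->tail % RING_SIZE];
    r->tail++;
    r->kicked = (r->head != r->tail); /* stay notified while data remains */
    return true;
}
```

A full-duplex link in this model would use two `ring_t` instances, one per direction, which is why neither side ever writes the other side's index.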

Relying on these frameworks, the RPMsg framework implements channel-based communication. Each channel is identified by a textual name and has a local (“source”) RPMsg address and a remote (“destination”) RPMsg address.
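
To illustrate how the source and destination addresses travel with each message, here is a minimal sketch of a header placed in front of every payload. The field layout is modeled on `struct rpmsg_hdr` from the Linux virtio RPMsg bus driver. The 512-byte buffer size, the names `msg_hdr`, `msg_pack`, and `MSG_BUF_SIZE`, and the use of native byte order are assumptions made for this example.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MSG_BUF_SIZE 512u /* assumed size of one shared buffer */

/* Header written in front of every payload (modeled on struct rpmsg_hdr). */
struct msg_hdr {
    uint32_t src;      /* source endpoint address */
    uint32_t dst;      /* destination endpoint address */
    uint32_t reserved;
    uint16_t len;      /* payload length in bytes */
    uint16_t flags;
};

/* Write header and payload into buf; return bytes used, or 0 if too large. */
static size_t msg_pack(uint8_t *buf, uint32_t src, uint32_t dst,
                       const void *payload, uint16_t len)
{
    struct msg_hdr hdr = { .src = src, .dst = dst, .len = len };

    if (len > MSG_BUF_SIZE - sizeof(hdr))
        return 0; /* payload does not fit in one shared buffer */
    memcpy(buf, &hdr, sizeof(hdr));
    memcpy(buf + sizeof(hdr), payload, len);
    return sizeof(hdr) + len;
}
```

With these assumptions the header occupies 16 bytes, which leaves 496 bytes of payload per buffer.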

An RPMsg framework must also be implemented on the remote processor side. Several solutions exist; we recommend using OpenAMP.

Overviews of the communication mechanisms involved are available at:

2. System overview

2.1. Component description

- remoteproc: The remoteproc framework allows different platforms/architectures to control (power on, load firmware, power off) the remote processors. This framework also adds rpmsg virtio devices for remote processors that support the RPMsg protocol. More details on this framework are available in the remote proc framework page.

- virtio: VirtIO framework that supports virtualization. It provides an efficient transport layer based on a shared ring buffer (vring). For more details about this framework please refer to the link below:

- rpmsg: A virtio-based messaging bus that allows kernel drivers to communicate with remote processors available on the system. It provides the messaging infrastructure, facilitating the writing of wire-protocol messages by client drivers. Client drivers can then, in turn, expose appropriate user space interfaces if needed.

- rpmsg_client_driver: The client driver that implements a service associated with the remote processor. The RPMsg framework probes this driver when the remote processor requests the associated service with a "new service announcement" RPMsg message.
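
The probing step can be sketched in user-space C as a name lookup. This is an illustrative model, not kernel code: the announcement structure is simplified, and the names `ns_announcement`, `match_service`, and `NAME_SIZE` are hypothetical.

```c
#include <stdint.h>
#include <string.h>

#define NAME_SIZE 32 /* hypothetical maximum service-name length */

/* Simplified "new service announcement" sent by the remote processor. */
struct ns_announcement {
    char     name[NAME_SIZE]; /* textual service name */
    uint32_t addr;            /* remote endpoint address offering the service */
};

/* Return the index of the local driver registered for this service, or -1. */
static int match_service(const char *const drivers[], int count,
                         const struct ns_announcement *ns)
{
    for (int i = 0; i < count; i++) {
        if (strncmp(drivers[i], ns->name, NAME_SIZE) == 0)
            return i; /* this client driver would be probed */
    }
    return -1; /* no client driver registered for this service */
}
```

An announcement whose name matches no registered driver is simply left unserved in this model.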

2.2. RPMsg definitions

Implementing an RPMsg client requires an understanding of two concepts: channels and endpoints.

- RPMsg channel:

An RPMsg client is associated with a communication channel between the master and remote processors. The client is identified by a textual service name registered in the RPMsg framework. The communication channel is established when the locally registered service name matches the service announced by the remote processor.

- RPMsg endpoint:

RPMsg endpoints provide logical connections through an RPMsg channel. Each endpoint has a unique source address and an associated callback function, which allows the user to bind multiple endpoints to the same channel. When a client driver creates an endpoint with a local address, all inbound messages whose destination address equals that local address are routed to the endpoint. Note that every channel has a default endpoint, so applications can communicate without creating any additional endpoint.
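
The routing rule above can be sketched as a small dispatch table. This is a user-space illustration under simplifying assumptions, not the kernel implementation; `channel`, `endpoint`, `ept_create`, `ept_dispatch`, `count_cb`, and `MAX_EPT` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

#define MAX_EPT 4 /* hypothetical table size */

typedef void (*ept_cb_t)(const void *data, size_t len, uint32_t src, void *priv);

struct endpoint {
    uint32_t addr; /* unique local (source) address */
    ept_cb_t cb;   /* callback run for each inbound message */
    void    *priv; /* user context handed back to the callback */
};

struct channel {
    struct endpoint epts[MAX_EPT];
    int count;
};

/* Bind a new endpoint; fails if the table is full or the address is taken. */
static int ept_create(struct channel *ch, uint32_t addr, ept_cb_t cb, void *priv)
{
    if (ch->count == MAX_EPT)
        return -1;
    for (int i = 0; i < ch->count; i++)
        if (ch->epts[i].addr == addr)
            return -1;
    ch->epts[ch->count++] = (struct endpoint){ addr, cb, priv };
    return 0;
}

/* Route an inbound message to the endpoint whose address equals dst. */
static int ept_dispatch(struct channel *ch, uint32_t dst, uint32_t src,
                        const void *data, size_t len)
{
    for (int i = 0; i < ch->count; i++) {
        if (ch->epts[i].addr == dst) {
            ch->epts[i].cb(data, len, src, ch->epts[i].priv);
            return 0;
        }
    }
    return -1; /* no endpoint bound to dst: message dropped */
}

/* Example callback: counts received messages through priv. */
static void count_cb(const void *data, size_t len, uint32_t src, void *priv)
{
    (void)data; (void)len; (void)src;
    ++*(int *)priv;
}
```

Two endpoints bound to the same channel with different addresses each receive only the messages addressed to them, which is the property that lets several logical connections share one channel.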

2.3. API description

The user API is described in the Linux kernel RPMsg documentation [5].

3. Configuration

3.1. Kernel configuration

The RPMsg framework is automatically enabled when the remote STM32_RPROC configuration is activated.

3.2. Device tree configuration

No device tree configuration is needed. The memory region allocated for RPMsg buffers is declared in the remote proc framework device tree.

4. How to use the framework

The RPMsg framework is used by Linux client drivers. For details and an example of a simple client, refer to the associated Linux documentation [5].

4.1. RPMsg TTY

The rpmsg_tty driver[6] simulates a serial link for communication between the host processor running Linux and the coprocessor. It allows user applications to communicate with the coprocessor through a standard TTY device interface.

- Linux kernel configuration: RPMSG_TTY

    Device Drivers --->
        Character devices --->
            <*> RPMSG tty driver

- RPMsg channel name: "rpmsg_tty"

- User space interface: "/dev/ttyRPMSG<X>", where <X> is the instance number from 0 to 32.

- Application sample:

- Basic functions:

- open:

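    /* Headers used by the ttyRPMSG helper functions */
    #include <errno.h>
    #include <fcntl.h>
    #include <stdio.h>
    #include <string.h>
    #include <sys/ioctl.h>
    #include <termios.h>
    #include <unistd.h>

    /* Open /dev/ttyRPMSG<ttyNb>; return the file descriptor, or -errno on failure */
    int openTtyRpmsg(int ttyNb)
    {
        char devName[50];
        int FdRpmsg;

        snprintf(devName, sizeof(devName), "/dev/ttyRPMSG%d", ttyNb);
        FdRpmsg = open(devName, O_RDWR | O_NOCTTY | O_NONBLOCK);
        if (FdRpmsg < 0) {
            FdRpmsg = -errno;
            printf("Error opening ttyRPMSG%d, err=%d\n", ttyNb, FdRpmsg);
        }
        return FdRpmsg;
    }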

- close:

    int closeTtyRpmsg(int FdRpmsg)
    {
        close(FdRpmsg);
        return 0;
    }

- write:

    int writeTtyRpmsg(int FdRpmsg, int len, char* pData)
    {
        int result = 0;

        if (FdRpmsg < 0) {
            printf("CA7 : Error writing ttyRPMSG, fileDescriptor is not set\n");
            return FdRpmsg;
        }
        result = write(FdRpmsg, pData, len);
        return result;
    }

- read:

    int readTtyRpmsg(int FdRpmsg, int len, char* pData)
    {
        int byte_avail = 0;

        if (FdRpmsg < 0) {
            printf("CA7 : Error reading ttyRPMSG, fileDescriptor is not set\n");
            return FdRpmsg;
        }
        /* Query the number of pending bytes; report the error if the query fails */
        if (ioctl(FdRpmsg, FIONREAD, &byte_avail) < 0)
            return -errno;
        if (byte_avail <= 0)
            return 0;
        /* Read at most len bytes, and never more than what is available */
        return read(FdRpmsg, pData, byte_avail >= len ? len : byte_avail);
    }

- control:

    int ControlTtyRpmsg(int FdRpmsg, int modeRaw)
    {
        struct termios tiorpmsg;
        int err;

        /* get current port settings */
        if (tcgetattr(FdRpmsg, &tiorpmsg) < 0) {
            err = errno;
            printf("Error %d in %s tcgetattr\n", err, __func__);
            return -err;
        }
        if (modeRaw) {
            /* Terminal controls are deactivated, used to transfer raw data without control */
            memset(&tiorpmsg, 0, sizeof(tiorpmsg));
            tiorpmsg.c_cflag = (CS8 | CLOCAL | CREAD);
            tiorpmsg.c_iflag = IGNPAR;
            tiorpmsg.c_oflag = 0;
            tiorpmsg.c_lflag = 0;
            tiorpmsg.c_cc[VTIME] = 0;
            tiorpmsg.c_cc[VMIN] = 1;
            cfmakeraw(&tiorpmsg);
        } else {
            /* ECHO off, other bits unchanged */
            tiorpmsg.c_lflag &= ~ECHO;
            /* do not convert LF to CR LF */
            tiorpmsg.c_oflag &= ~ONLCR;
        }
        if (tcsetattr(FdRpmsg, TCSANOW, &tiorpmsg) < 0) {
            err = errno;
            printf("Error %d in %s tcsetattr\n", err, __func__);
            return -err;
        }
        return 0;
    }

- For more examples, please refer to:

- the "OpenAMP_TTY_echo" application example in the list of available projects,

- the How to exchange data buffers with the coprocessor article that provides source code example for the direct buffer exchange mode.
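
Because the sample opens the device with `O_NONBLOCK` and `readTtyRpmsg` returns 0 when nothing is pending, a caller needs some way to wait for data without busy-looping. One possible approach, shown here as a sketch, is to block in `poll()` before reading. The helper name `waitTtyRpmsg` is hypothetical and not part of the original sample.

```c
#include <errno.h>
#include <poll.h>

/*
 * Wait until fd has data to read or timeoutMs elapses.
 * Returns 1 if readable, 0 on timeout, -errno on failure.
 */
int waitTtyRpmsg(int fd, int timeoutMs)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN };
    int ret;

    /* Restart the wait if a signal interrupts it */
    do {
        ret = poll(&pfd, 1, timeoutMs);
    } while (ret < 0 && errno == EINTR);

    if (ret < 0)
        return -errno;
    if (ret == 0)
        return 0; /* timeout, nothing received */
    /* Anything other than POLLIN (hang-up, invalid fd) is reported as an I/O error */
    return (pfd.revents & POLLIN) ? 1 : -EIO;
}
```

A typical use would be to call `waitTtyRpmsg(fd, 1000)` and invoke `readTtyRpmsg` only when it returns 1.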

4.2. RPMsg char

The rpmsg_char driver[7] allows user applications to create local endpoints, exposed through a char device interface, to communicate with a predefined remote processor endpoint (that is, an endpoint with a known address).

- Linux kernel configuration: RPMSG_CHAR

    Device Drivers --->
        Rpmsg drivers --->
            <*> RPMSG device interface

- RPMsg channel name: none. The rpmsg_char driver implements only endpoint-to-endpoint communication with predefined addresses.

- User space interface:

- "/dev/rpmsg_ctrl0" to instantiate the "/dev/rpmsg0" device interface,

- "/dev/rpmsg0" device interface for communication.

- Application sample:

- create local endpoint

    #include <err.h>
    #include <fcntl.h>
    #include <stdint.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <sys/ioctl.h>
    #include <unistd.h>

    struct rpmsg_endpoint_info {
        char name[32];
        uint32_t src;
        uint32_t dst;
    };

    #define RPMSG_CREATE_EPT_IOCTL _IOW(0xb5, 0x1, struct rpmsg_endpoint_info)

    /*
     * argv[1] : "/dev/rpmsg_ctrl<X>", where <X> is the instance number of the device
     * argv[2] : a name for the device
     * argv[3] : local endpoint address
     * argv[4] : remote endpoint address to communicate with
     */
    int createEndPoint(int argc, char **argv)
    {
        struct rpmsg_endpoint_info ept;
        int ret;
        int fd;
        char *endptr;

        if (argc != 5)
            exit(1);

        fd = open(argv[1], O_RDWR);
        if (fd < 0)
            err(1, "failed to open %s", argv[1]);

        /* copy the name and force NUL termination */
        strncpy(ept.name, argv[2], sizeof(ept.name));
        ept.name[sizeof(ept.name)-1] = '\0';

        /* local address: must be a non-zero decimal number */
        ept.src = strtoul(argv[3], &endptr, 10);
        if (*endptr || ept.src == 0)
            exit(1);

        /* remote address: must be a non-zero decimal number */
        ept.dst = strtoul(argv[4], &endptr, 10);
        if (*endptr || ept.dst == 0)
            exit(1);

        ret = ioctl(fd, RPMSG_CREATE_EPT_IOCTL, &ept);
        if (ret < 0) {
            fprintf(stderr, "failed to create endpoint\n");
            exit(1);
        }
        close(fd);
        return 0;
    }

- write and read on the local endpoint

    /*
     * argv[1] : "/dev/rpmsg<X>", where <X> is the instance number of the device
     */
    int ping(int argc, char **argv)
    {
        char buffer[256];
        int ret;
        int fd;

        if (argc != 2)
            exit(1);

        fd = open(argv[1], O_RDWR);
        if (fd < 0)
            err(1, "failed to open %s", argv[1]);

        snprintf(buffer, sizeof(buffer), "ping %s", argv[1]);
        ret = write(fd, buffer, strlen(buffer));
        if (ret < 0) {
            fprintf(stderr, "failed to write endpoint %s\n", argv[1]);
            exit(1);
        }

        /* keep one byte free so the received data can be NUL-terminated */
        ret = read(fd, buffer, sizeof(buffer) - 1);
        if (ret < 0) {
            fprintf(stderr, "failed to read endpoint %s\n", argv[1]);
            exit(1);
        }
        buffer[ret] = '\0';
        fprintf(stderr, "message received: \"%s\"\n", buffer);
        close(fd);
        return 0;
    }

- remove local endpoint

    #define RPMSG_DESTROY_EPT_IOCTL _IO(0xb5, 0x2)

    /*
     * argv[1] : "/dev/rpmsg<X>", where <X> is the instance number of the device
     */
    int destroyEndPoint(int argc, char **argv)
    {
        int ret;
        int fd;

        if (argc != 2)
            exit(1);

        fd = open(argv[1], O_RDWR);
        if (fd < 0)
            err(1, "failed to open %s", argv[1]);

        ret = ioctl(fd, RPMSG_DESTROY_EPT_IOCTL, NULL);
        if (ret < 0) {
            fprintf(stderr, "failed to destroy endpoint\n");
            exit(1);
        }
        close(fd);
        return 0;
    }
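
The endpoint creation sample converts its address arguments with `strtoul` and rejects a zero value. As a sketch of how that validation could be factored out and tightened (rejecting signs, overflow, and trailing characters), the following helper may be useful. The name `parseRpmsgAddr` is hypothetical, and treating 0 as invalid simply mirrors the check in the sample.

```c
#include <ctype.h>
#include <errno.h>
#include <stdint.h>
#include <stdlib.h>

/*
 * Parse a decimal, non-zero 32-bit endpoint address.
 * Returns 0 and stores the value in *addr on success, -1 on error.
 */
static int parseRpmsgAddr(const char *str, uint32_t *addr)
{
    char *end;
    unsigned long val;

    /* strtoul accepts leading spaces and signs; require a digit up front */
    if (str == NULL || !isdigit((unsigned char)*str))
        return -1;

    errno = 0;
    val = strtoul(str, &end, 10);
    if (errno != 0 || *end != '\0' || val == 0 || val > UINT32_MAX)
        return -1;

    *addr = (uint32_t)val;
    return 0;
}
```

With such a helper, the two address checks reduce to calls like `parseRpmsgAddr(argv[3], &ept.src)`, and both addresses go through the same validation.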

5. How to trace and debug the framework

5.1. How to trace

RPMsg and virtio dynamic debug traces can be added using the following commands:

    echo -n 'file virtio_rpmsg_bus.c +p' > /sys/kernel/debug/dynamic_debug/control
    echo -n 'file virtio_ring.c +p' > /sys/kernel/debug/dynamic_debug/control

6. References