1. STM32Cube.AI enabled OpenMV firmware

This tutorial walks you through the process of integrating your own neural network into the OpenMV environment.

The OpenMV open-source project provides the source code for compiling the OpenMV H7 firmware with STM32Cube.AI enabled.

The process for using STM32Cube.AI with OpenMV is described in the following figure.

- Train your neural network using your favorite deep learning framework.

- Convert your trained network to optimized C code using the STM32Cube.AI tool.

- Download the OpenMV firmware source code.

- Add the generated files to the firmware source code.

- Compile with the GCC toolchain.

- Flash the board using OpenMV IDE.

- Program the board with MicroPython and perform inference.

1.1. Prerequisites

This article assumes a Linux environment (tested with Ubuntu 18.04).

All the commands starting with this syntax should be executed in a Linux console:

<mycommand>

1.2. Requirements

1.2.1. Check that your environment is up-to-date

sudo apt update
sudo apt upgrade
sudo apt install git zip make build-essential tree

1.2.2. Create your workspace directory

mkdir $HOME/openmv_workspace

1.2.3. Install the stm32ai command line to generate the optimized code

- Download the latest version of X-CUBE-AI-Linux from the ST website into your openmv_workspace directory.

- Extract the archive:

cd $HOME/openmv_workspace
chmod 644 en.en.x-cube-ai-v6-0-0-linux.zip
unzip en.en.x-cube-ai-v6-0-0-linux.zip
mv STMicroelectronics.X-CUBE-AI.6.0.0.pack STMicroelectronics.X-CUBE-AI.6.0.0.zip
unzip STMicroelectronics.X-CUBE-AI.6.0.0.zip -d X-CUBE-AI.6.0.0
unzip stm32ai-linux-6.0.0.zip -d X-CUBE-AI.6.0.0/Utilities

- Add the stm32ai command line to your PATH.

export PATH=$HOME/openmv_workspace/X-CUBE-AI.6.0.0/Utilities/linux:$PATH

- You can verify that the stm32ai command line is properly installed:

stm32ai --version
stm32ai - Neural Network Tools for STM32AI v1.4.1 (STM.ai v6.0.0-RC6)

1.2.4. Install the GNU Arm toolchain version 7-2018-q2 to compile the firmware

sudo apt remove gcc-arm-none-eabi
sudo apt autoremove
sudo -E add-apt-repository ppa:team-gcc-arm-embedded/ppa
sudo apt update
sudo -E apt install gcc-arm-embedded

Alternatively, you can download the toolchain directly from Arm:

wget https://armkeil.blob.core.windows.net/developer/Files/downloads/gnu-rm/7-2018q2/gcc-arm-none-eabi-7-2018-q2-update-linux.tar.bz2
tar xf gcc-arm-none-eabi-7-2018-q2-update-linux.tar.bz2

Then add the path to gcc-arm-none-eabi-7-2018-q2-update/bin/ to your PATH environment variable.

You can verify that the GNU Arm toolchain is properly installed:

arm-none-eabi-gcc --version
arm-none-eabi-gcc (GNU Tools for Arm Embedded Processors 7-2018-q2-update) 7.3.1 20180622 (release) [ARM/embedded-7-branch revision 261907]
Copyright (C) 2017 Free Software Foundation, Inc.
This is free software; see the source for copying conditions. There is NO
warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.
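The two checks above (stm32ai and arm-none-eabi-gcc) can also be scripted. The following is a minimal sketch, assuming both tools are expected on PATH under exactly those names; the helper name find_tools is hypothetical and not part of OpenMV or X-CUBE-AI.

```python
import shutil


def find_tools(names):
    """Map each tool name to its resolved path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    # Tool names taken from the installation steps of this tutorial.
    for name, path in find_tools(["stm32ai", "arm-none-eabi-gcc"]).items():
        print("{:<20} {}".format(name, path or "NOT FOUND - check your PATH"))
```

A tool reported as not found usually means the corresponding export PATH line was not executed in the current shell.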

1.2.5. Check Python version

Make sure that when you type python --version in your shell, the version is Python 3.x.x. If not, create a symbolic link, adjusting python3.8 to the Python 3 version installed on your system:

sudo ln -s /usr/bin/python3.8 /usr/bin/python
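The same check can be done from within the interpreter that the python command launches. This is a small sketch only; the function name is_python3 is hypothetical, and the script is written so that it also runs under Python 2 in order to report the problem.

```python
import sys


def is_python3(version_info=sys.version_info):
    """Return True when the given version tuple belongs to Python 3."""
    return version_info[0] == 3


if __name__ == "__main__":
    print("Python {}.{} detected".format(*sys.version_info[:2]))
    if not is_python3():
        sys.exit("The 'python' command should point to Python 3.x")
```

Save it to a file and run it with python (not python3), so that the interpreter being tested is the one the rest of this tutorial relies on.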

1.2.6. Install the OpenMV IDE

Download OpenMV IDE from the OpenMV website.

OpenMV IDE is used to develop MicroPython scripts and to flash the board.

1.3. Step 1 - Download and prepare the OpenMV project

In this section we clone the OpenMV project, check out a known working version, and create a branch.

Then we initialize the git submodules. This clones the OpenMV dependencies, such as MicroPython.

1.3.1. Clone the OpenMV project

cd $HOME/openmv_workspace
git clone --recursive https://github.com/openmv/openmv.git

1.3.2. Checkout a known working version

cd openmv
git checkout v3.9.4

1.4. Step 2 - Add the STM32Cube.AI library to OpenMV

Now that the OpenMV firmware is downloaded, we need to copy over the STM32Cube.AI runtime library and header files into the OpenMV project.

cd $HOME/openmv_workspace/openmv/src/stm32cubeai

mkdir -p AI/{Inc,Lib}

mkdir data

Then copy the files from STM32Cube.AI to the AI directory:

cp $HOME/openmv_workspace/X-CUBE-AI.6.0.0/Middlewares/ST/AI/Inc/* AI/Inc/
cp $HOME/openmv_workspace/X-CUBE-AI.6.0.0/Middlewares/ST/AI/Lib/GCC/STM32H7/NetworkRuntime*_CM7_GCC.a AI/Lib/NetworkRuntime_CM7_GCC.a

After this operation, the AI directory should look like this:

AI/
├── Inc
│   ├── ai_common_config.h
│   ├── ai_datatypes_defines.h
│   ├── ai_datatypes_format.h
│   ├── ai_datatypes_internal.h
│   ├── ai_log.h
│   ├── ai_math_helpers.h
│   ├── ai_network_inspector.h
│   ├── ai_platform.h
│   ├── ...
├── Lib
│   └── NetworkRuntime_CM7_GCC.a
└── LICENSE

1.5. Step 3 - Generate the code for a NN model

In this section, we train a convolutional neural network to recognize hand-written digits.

Then we generate STM32-optimized C code for this network using STM32Cube.AI.

These files will be added to OpenMV firmware source code.

1.5.1. Train a convolutional neural network

The convolutional neural network for digit classification (MNIST) from Keras will be used as an example. If you want to train the network, you need to have Keras installed.

To train the network and save the model to the disk, run the following commands:

cd $HOME/openmv_workspace/openmv/src/stm32cubeai/example
python3 mnist_cnn.py

1.5.2. STM32 optimized code generation

To generate the STM32 optimized code, use the stm32ai command line tool as follows:

cd $HOME/openmv_workspace/openmv/src/stm32cubeai
stm32ai generate -m example/mnist_cnn.h5 -o data/

The following files are generated in $HOME/openmv_workspace/openmv/src/stm32cubeai/data:

- network.h

- network.c

- network_data.h

- network_data.c
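Before moving on to compilation, it can be useful to confirm that all four files were actually produced. The sketch below assumes the workspace layout used in this tutorial; the helper name missing_generated_files is hypothetical.

```python
from pathlib import Path

# File names listed above as the output of "stm32ai generate".
EXPECTED_FILES = ("network.h", "network.c", "network_data.h", "network_data.c")


def missing_generated_files(data_dir, expected=EXPECTED_FILES):
    """Return the expected file names that are absent from data_dir."""
    data_dir = Path(data_dir)
    return [name for name in expected if not (data_dir / name).is_file()]


if __name__ == "__main__":
    # Assumed location: the data directory created in Step 2.
    data_dir = Path.home() / "openmv_workspace/openmv/src/stm32cubeai/data"
    missing = missing_generated_files(data_dir)
    if missing:
        print("Missing: {}".format(", ".join(missing)))
    else:
        print("All generated files present")
```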

1.5.3. Preprocessing

If you need special preprocessing before running the inference, modify the function ai_transform_input located in src/stm32cubeai/nn_st.c. By default, the code does the following:

- Simple resizing (subsampling)

- Conversion from unsigned char to float

- Scaling pixels from [0,255] to [0, 1.0]

The provided example might just work out of the box for your application, but you may want to take a look at this function.
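To make the three default steps concrete, here is a plain Python illustration of the same idea. It is not the C code from nn_st.c: the function name transform_input, its parameters, and the exact subsampling rule (picking the nearest source pixel by integer division) are assumptions chosen for readability.

```python
def transform_input(pixels, src_w, src_h, dst_w, dst_h):
    """Subsample a row-major grayscale image and scale it to floats in [0, 1].

    pixels: flat sequence of src_w * src_h integers in [0, 255].
    Returns a flat list of dst_w * dst_h floats.
    """
    out = []
    for y in range(dst_h):
        src_y = y * src_h // dst_h          # source row picked by subsampling
        for x in range(dst_w):
            src_x = x * src_w // dst_w      # source column picked by subsampling
            value = pixels[src_y * src_w + src_x]
            out.append(float(value) / 255.0)  # unsigned char -> float in [0, 1]
    return out


if __name__ == "__main__":
    # 4x4 gradient image (values 0, 17, ..., 255) reduced to 2x2
    image = [i * 17 for i in range(16)]
    print(transform_input(image, 4, 4, 2, 2))
```

If your network was trained with a different normalization (for example mean subtraction), that is the kind of change that would have to be mirrored in ai_transform_input.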

1.6. Step 4 - Compile

1.6.1. Build MicroPython cross-compiler

The MicroPython cross-compiler is used to pre-compile Python scripts to .mpy files, which can then be included (frozen) into the firmware/executable for a port. To build mpy-cross, use:

cd $HOME/openmv_workspace/openmv/src/micropython/mpy-cross
make

1.6.2. Build the firmware

- Lower the heap section in RAM to leave more space for the neural network activation buffers.

  - For OpenMV H7: edit src/omv/boards/OPENMV4/omv_boardconfig.h, find OMV_HEAP_SIZE, and set it to 230K.

  - For OpenMV H7 Plus: edit src/omv/boards/OPENMV4P/omv_boardconfig.h, find OMV_HEAP_SIZE, and set it to 230K.

- Execute the following commands to compile:

  - For OpenMV H7, which is the default target board, there is no need to define TARGET:

cd $HOME/openmv_workspace/openmv/src/
make clean
make CUBEAI=1

  - For OpenMV H7 Plus, add TARGET=OPENMV4P to the make command:

make TARGET=OPENMV4P CUBEAI=1

1.7. Step 5 - Flash the firmware

- Connect the OpenMV camera to the computer using a micro-USB to USB cable.

- Open OpenMV IDE.

- From the toolbar, select Tools > Run Bootloader.

- Select the firmware file (located in openmv/src/build/bin/firmware.bin) and follow the instructions.

- Once this is done, you can click the Connect button located at the bottom left of the IDE window

1.8. Step 6 - Program with MicroPython

- Open OpenMV IDE, and click the Connect button located at the bottom left of the IDE window

- Create a new MicroPython script: File > New File

- You can start from this example script, which runs the MNIST neural network embedded in the firmware:

'''
Copyright (c) 2019 STMicroelectronics
This work is licensed under the MIT license
'''
# STM32Cube.AI on OpenMV MNIST Example

import sensor, image, time, nn_st

sensor.reset() # Reset and initialize the sensor.
sensor.set_contrast(3)
sensor.set_brightness(0)
sensor.set_auto_gain(True)
sensor.set_auto_exposure(True)
sensor.set_pixformat(sensor.GRAYSCALE) # Set pixel format to Grayscale
sensor.set_framesize(sensor.QQQVGA) # Set frame size to 80x60
sensor.skip_frames(time = 2000) # Wait for settings to take effect.
clock = time.clock() # Create a clock object to track the FPS.

# [STM32Cube.AI] Initialize the network
net = nn_st.loadnnst('network')

nn_input_sz = 28 # The NN input is 28x28

while(True):
    clock.tick() # Update the FPS clock.
    img = sensor.snapshot() # Take a picture and return the image.

    # Crop in the middle (avoids vignetting)
    img.crop((img.width()//2-nn_input_sz//2,
              img.height()//2-nn_input_sz//2,
              nn_input_sz,
              nn_input_sz))

    # Binarize the image
    img.midpoint(2, bias=0.5, threshold=True, offset=5, invert=True)

    # [STM32Cube.AI] Run the inference
    out = net.predict(img)
    print('Network argmax output: {}'.format( out.index(max(out)) ))
    img.draw_string(0, 0, str(out.index(max(out))))
    print('FPS {}'.format(clock.fps())) # Note: OpenMV Cam runs about half as fast when connected

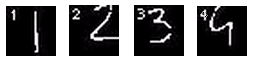

Take a white sheet of paper and draw numbers with a black pen, point the camera towards the paper. The code must yield the following output:
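The crop rectangle passed to img.crop in the example script is simple integer arithmetic, and it can be checked on a desktop Python interpreter without a camera. The helper name center_crop_rect below is hypothetical; the frame size (80x60 for QQQVGA) and the 28x28 input size come from the script.

```python
def center_crop_rect(width, height, size):
    """Return (x, y, w, h) of a size x size window centred in the frame."""
    return (width // 2 - size // 2, height // 2 - size // 2, size, size)


if __name__ == "__main__":
    # QQQVGA frame (80x60) and the 28x28 network input used in the script
    print(center_crop_rect(80, 60, 28))  # prints (26, 16, 28, 28)
```

For a network with a different input size, the window must still fit inside the frame, so either the input size has to stay below the frame height or a larger frame size has to be selected.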

2. Documentation of MicroPython STM32Cube.AI wrapper

This section provides information about the two MicroPython functions added to the OpenMV MicroPython framework to initialize and run STM32Cube.AI optimized neural network inference.

2.1. loadnnst

nn_st.loadnnst(network_name)

Initialize the network named network_name.

Arguments:

- network_name : String, usually 'network'

Returns:

- A network object, used to make predictions

Example:

import nn_st

net = nn_st.loadnnst('network')

2.2. predict

out = net.predict(img)

Runs a network prediction with img as input.

Arguments:

- img : Image object from the OpenMV image module, usually obtained from sensor.snapshot()

Returns:

- Network predictions as a Python list

Example:

'''

Copyright (c) 2019 STMicroelectronics

This work is licensed under the MIT license

'''

import sensor, image, nn_st

# Init the sensor

sensor.reset()

sensor.set_pixformat(sensor.RGB565)

sensor.set_framesize(sensor.QVGA)

# Init the network

net = nn_st.loadnnst('network')

# Capture a frame

img = sensor.snapshot()

# Do the prediction

output = net.predict(img)
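Since predict returns a plain Python list, the result can be post-processed with ordinary list operations, as the MNIST script does with out.index(max(out)). The sketch below wraps that idiom in a hypothetical helper named top_prediction; the sample scores are made up for illustration and are not real network output.

```python
def top_prediction(out):
    """Return (class_index, score) for the highest-scoring entry of out."""
    score = max(out)
    return out.index(score), score


if __name__ == "__main__":
    # Made-up scores for a 10-class network such as the MNIST example
    scores = [0.01, 0.02, 0.05, 0.80, 0.02, 0.02, 0.03, 0.02, 0.02, 0.01]
    index, score = top_prediction(scores)
    print("Predicted class {} with score {}".format(index, score))
```

When several entries share the maximum value, list.index returns the first one, so ties resolve to the lowest class index.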