1. STEdgeAI-Core documentation

STEdgeAI-Core is a free-of-charge desktop tool to evaluate, optimize, and compile edge AI models for multiple ST products, including microcontrollers, microprocessors, and smart sensors with ISPU and MLC. It is available as a command-line interface (CLI) and automatically converts pretrained artificial intelligence algorithms, including neural network and classical machine learning models, into equivalent optimized C code to be embedded in the application. The generated optimized library offers an easy-to-use, developer-friendly way to deploy AI on edge devices. The tool offers several ways to benchmark and validate artificial intelligence algorithms, either on a personal workstation (Windows, Linux, Mac) or directly on the target ST platform. The STEdgeAI-Core documentation is available online and is also integrated into the tool itself.

2. X-CUBE-AI documentation

X-CUBE-AI is an STM32Cube Expansion Package based on STEdgeAI-Core technology and is part of the STM32Cube.AI ecosystem. It extends STM32CubeMX capabilities with automatic conversion of pretrained artificial intelligence algorithms, including neural network and classical machine learning models, and integrates the generated optimized library into the user's project. The easiest way to use X-CUBE-AI is to download it from within the STM32CubeMX tool (version 5.4.0 or newer). The X-CUBE-AI Expansion Package also offers several ways to validate artificial intelligence algorithms, both on a desktop PC and on an STM32. With X-CUBE-AI, it is also possible to measure performance on STM32 devices without writing any dedicated C code by hand.

There are four main documentation items for X-CUBE-AI, complemented by wiki articles:

- Documentation available on st.com:

- The release notes for each version, available within the X-CUBE-AI Expansion Package

- The embedded documentation available within the X-CUBE-AI Expansion Package, which corresponds to the STEdgeAI-Core documentation

To access the embedded documentation, first install X-CUBE-AI. The installation process is described in the getting started user manual. Once X-CUBE-AI is installed, the documentation is available in the installation directory under X-CUBE-AI/10.0.0/Documentation/index.html (this example is given for version 10.0.0; adapt it to the version used).

On Windows®, the documentation is located by default at the following address (replace the string username with your Windows® username):

file:///C:/Users/username/STM32Cube/Repository/Packs/STMicroelectronics/X-CUBE-AI/10.0.0/Documentation/index.html

The release notes are available at:

file:///C:/Users/username/STM32Cube/Repository/Packs/STMicroelectronics/X-CUBE-AI/10.0.0/Release_Notes.html
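The default Windows® locations described above can be derived from the username and the X-CUBE-AI version. The following Python snippet is only a minimal sketch of that substitution: the function name `xcubeai_doc_uris` is hypothetical and not part of any ST tool, and the directory layout is assumed to be the default one quoted above.

```python
from urllib.parse import quote


def xcubeai_doc_uris(username: str, version: str = "10.0.0") -> dict:
    """Build file:// URIs for the embedded documentation and release notes.

    Hypothetical helper: it assumes the default Windows repository layout
    quoted in the text and does not check that the files actually exist.
    """
    pack_root = (
        f"C:/Users/{username}/STM32Cube/Repository/Packs/"
        f"STMicroelectronics/X-CUBE-AI/{version}"
    )
    # Percent-encode characters such as spaces in the username,
    # but keep the drive colon and the path separators readable.
    base = "file:///" + quote(pack_root, safe="/:")
    return {
        "documentation": f"{base}/Documentation/index.html",
        "release_notes": f"{base}/Release_Notes.html",
    }


if __name__ == "__main__":
    # Replace "username" and the version with the values for your installation.
    for name, uri in xcubeai_doc_uris("username", "10.0.0").items():
        print(f"{name}: {uri}")
```

The resulting strings can be pasted into a browser address bar or passed to Python's standard `webbrowser.open()`. If the STM32Cube repository was installed in a non-default location, the base path must be adapted accordingly.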

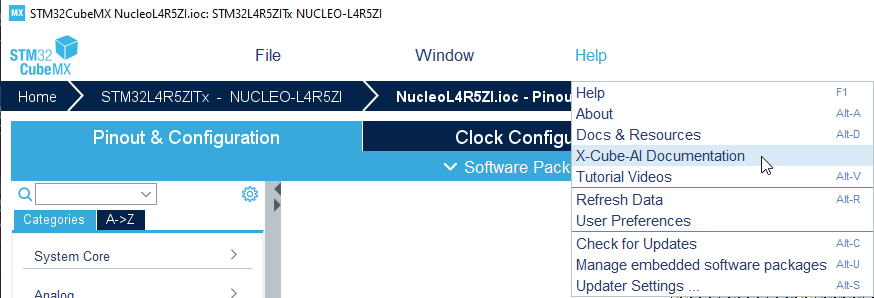

The embedded documentation can also be accessed through the STM32CubeMX UI, once the X-CUBE-AI Expansion Package has been selected and loaded, by clicking on the "Help" menu and then on "X-CUBE-AI documentation":