1. How to reduce audio latencies?

The audio latencies provided in the STM32CubeWBA package follow the recommendations of the BAP profile, to be as interoperable as possible. In particular, the presentation delay is quite high. The guidelines below, applied to TMAP projects in media mode (Unicast Media Receiver UMR and Unicast Media Sender UMS), explain how to reduce each latency component. Note that Audio Configuration, Codec Specific Configuration and QoS Configuration are defined in the BAP specification.

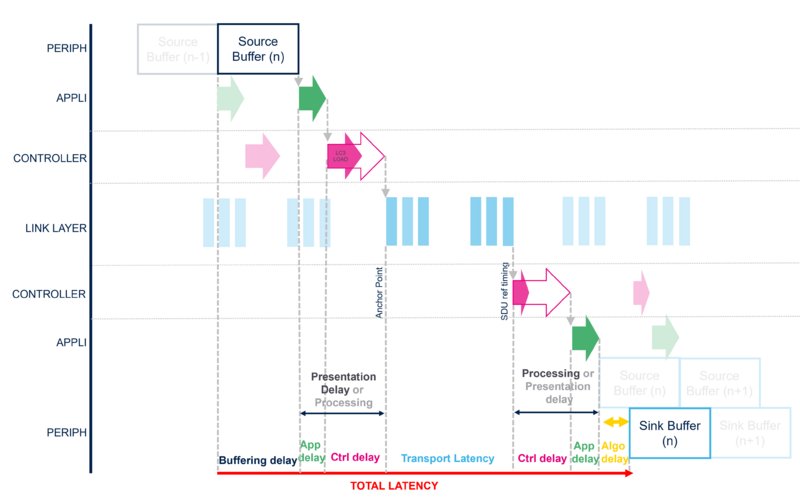

1.1. Buffering and algorithmic delays

Buffering delay and algorithmic delay are linked to the codec in use: the first one corresponds to the frame duration, while the second one is introduced inside the codec algorithm to overlap frames and ensure signal continuity. The mandatory LC3 codec mainly allows two configurations, with sub-latencies of 12.5 ms (10 ms frame duration) and 11.5 ms (7.5 ms frame duration). A 10 ms frame duration is the most common configuration and provides better audio quality.
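As a quick check of the figures above, the sketch below adds the frame duration (buffering delay) to the LC3 algorithmic delay implied by the two totals: 2.5 ms for 10 ms frames and 4 ms for 7.5 ms frames. The function names are illustrative only and are not part of the STM32CubeWBA API.

```c
#include <stdint.h>

/* LC3 algorithmic delay (us) for the two frame durations discussed above.
 * Other frame durations are not covered by this sketch and return 0. */
static uint32_t lc3_algorithmic_delay_us(uint32_t frame_us)
{
    switch (frame_us) {
    case 10000u: return 2500u; /* 10 ms frames */
    case 7500u:  return 4000u; /* 7.5 ms frames */
    default:     return 0u;
    }
}

/* Codec sub-latency: one frame of buffering plus the algorithmic delay. */
static uint32_t lc3_sub_latency_us(uint32_t frame_us)
{
    return frame_us + lc3_algorithmic_delay_us(frame_us);
}
```

With these values, lc3_sub_latency_us(10000) gives 12500 us and lc3_sub_latency_us(7500) gives 11500 us, matching the 12.5 ms and 11.5 ms sub-latencies.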

To switch to a 7.5 ms frame duration, the server must support this configuration in its PAC; the central can then be told to try this configuration. The following parameters must be updated:

| Parameter | Central | Peripheral |
|---|---|---|
| Codec frame duration | Change TARGET_MEDIA_STREAM_QOS_CONFIG to LC3_QOS_xx_y_z, with y an odd value | Ensure that a specific codec configuration allows this frame duration, or add LC3_xx_y (with y an odd value) to a_mr_snk_pac_id[] in TMAPAPP_TMAPInit(). A PAC could also be added in CAPAPP_Init(). |
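The odd/even rule comes from the BAP numbering of codec configurations: within a sampling frequency, odd set numbers (for example 48_1, 48_3, 48_5) use 7.5 ms frames and even ones use 10 ms frames. The hypothetical helper below decodes a name following the LC3_QOS_xx_y_z pattern to illustrate this; it is not part of the STM32CubeWBA API, and it rejects the 44.1 kHz sets, whose frame durations differ slightly.

```c
#include <stdio.h>

/* Hypothetical helper: frame duration (us) implied by a name of the form
 * "LC3_QOS_<freq_khz>_<set>_<qos>". Odd sets use 7.5 ms frames, even sets
 * use 10 ms frames. Returns 0 if the name does not match the pattern or
 * refers to a 44.1 kHz set. */
static unsigned frame_us_from_qos_name(const char *name)
{
    unsigned freq_khz, set, qos;

    if (sscanf(name, "LC3_QOS_%u_%u_%u", &freq_khz, &set, &qos) != 3 || set == 0u) {
        return 0u;
    }
    if (freq_khz == 441u) {
        return 0u; /* 44.1 kHz sets: frame durations differ, not covered */
    }
    return (set % 2u != 0u) ? 7500u : 10000u;
}
```

For instance, "LC3_QOS_48_3_1" maps to 7500 us and "LC3_QOS_48_4_2" to 10000 us.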

The FrameBlockPerSdu parameter could multiply the buffering delay, but it is already set to 1 in our demo.

1.2. Processing delay (Central only)

Processing delay takes place on the central and is composed of the Controller delay and the Application delay.

The application delay is already set to the minimum value, corresponding to the ADC conversion time (no audio processing at application level).

Since the client has no synchronization constraint, the PREFFERED_CONTROLLER_DELAY define can be updated to a lower value; it is used if it falls within the local controller delay range. Note that the minimum value corresponds to the codec CPU load for the requested configuration, plus the maximum duration for which the codec can be interrupted during its processing (CODEC_PROC_MARGIN_US). Additionally, if the application has a source ASE, the radio preparation time CODEC_RF_SETUP_US is added.
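The minimum controller delay described above can be sketched as follows. All names are hypothetical: the inputs stand for the codec CPU time, CODEC_PROC_MARGIN_US and CODEC_RF_SETUP_US. Clamping a preferred value above the range down to the maximum is an assumption of this sketch; the text only states that a value inside the range is used, and section 2 shows that a value of 0 yields the minimum.

```c
#include <stdbool.h>
#include <stdint.h>

/* Inputs of the minimum controller delay, all in microseconds. */
typedef struct {
    uint32_t codec_cpu_us;   /* codec CPU time for the requested configuration */
    uint32_t proc_margin_us; /* max interruption during processing (CODEC_PROC_MARGIN_US) */
    uint32_t rf_setup_us;    /* radio preparation time (CODEC_RF_SETUP_US) */
    bool     has_source_ase; /* the RF setup time is only added with a source ASE */
} ctrl_delay_inputs_t;

static uint32_t min_controller_delay_us(const ctrl_delay_inputs_t *in)
{
    uint32_t delay_us = in->codec_cpu_us + in->proc_margin_us;

    if (in->has_source_ase) {
        delay_us += in->rf_setup_us;
    }
    return delay_us;
}

/* Assumption: a preferred delay outside [min_us, max_us] is clamped into it. */
static uint32_t select_controller_delay_us(uint32_t preferred_us,
                                           uint32_t min_us, uint32_t max_us)
{
    if (preferred_us < min_us) {
        return min_us;
    }
    if (preferred_us > max_us) {
        return max_us;
    }
    return preferred_us;
}
```

Lowering CODEC_PROC_MARGIN_US or CODEC_RF_SETUP_US lowers the floor directly, which is what the optimizations of section 2 rely on.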

1.3. Transport Latency

Transport latency corresponds to the time reserved for air transmission, and it increases with the targeted quality of service. It depends on the number of CISes, RTN, packet size, PHY, number of channels, etc. The application provides a maximum transport latency to the link layer, which returns the actual transport latency.

| Parameter | Details | Central | Peripheral |
|---|---|---|---|
| CIS number | Each CIS introduces a TIFS and a CIS offset | The default application tries to split channels over several CISes. This can be forced in TMAPAPP_StartMediaStream() with the ChannelPerCIS value | In tmap_app_conf.h, reduce APP_NUM_SNK_ASE to 1 sink ASE |
| Channel numbers | Each additional channel increases the packet size | In tmap_app_conf.h, reduce APP_MAX_CHANNEL_PER_SNK_ASE to 1 channel | |
| Packet size | The packet duration directly depends on its size and on the PHY | Depends on the LC3 configuration (frequency, frame duration, bitrate) and on the number of channels per ASE. Note that packets above 251 bytes are fragmented on air, adding more delay. | |
| Max transport latency and RTN | More retransmission opportunities increase the transport latency, even when the packet is successfully transmitted at the first attempt | The rtx_num and max_tp_latency fields of APP_QoSConf can be updated for the TARGET_MEDIA_STREAM_QOS_CONFIG. The Retransmission Number can be set down to zero, but the quality of service may be strongly impacted. Additionally, setting the Max Transport Latency equal to the SDU interval forces the link layer to use only one event to transmit the packet. | Usage of the first part of APP_QoSConf can be forced by setting ENABLE_AUDIO_HIGH_RELIABILITY to 0 |

The application already uses the LE 2M PHY by default to reduce bandwidth usage and power consumption.
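To see why packet size and PHY matter, the sketch below estimates the on-air duration of one uncoded LE packet: preamble (1 byte on LE 1M, 2 bytes on LE 2M), 4-byte access address, 2-byte PDU header, payload, 4-byte MIC when encrypted with a non-empty payload, and 3-byte CRC. It ignores inter-frame spacing and the acknowledgment from the other side, so it is only a lower bound on the time a subevent occupies, not the transport latency reported by the link layer. Names are illustrative.

```c
#include <stdbool.h>
#include <stdint.h>

typedef enum { PHY_1M, PHY_2M } le_phy_t;

/* On-air duration, in microseconds, of one uncoded LE packet. */
static uint32_t iso_pdu_air_time_us(le_phy_t phy, uint32_t payload_len, bool encrypted)
{
    const uint32_t preamble    = (phy == PHY_2M) ? 2u : 1u; /* bytes */
    const uint32_t access_addr = 4u;
    const uint32_t header      = 2u;
    const uint32_t mic         = (encrypted && payload_len > 0u) ? 4u : 0u;
    const uint32_t crc         = 3u;
    const uint32_t us_per_byte = (phy == PHY_2M) ? 4u : 8u; /* 2 Mbit/s vs 1 Mbit/s */

    return (preamble + access_addr + header + payload_len + mic + crc) * us_per_byte;
}
```

For example, an encrypted 90-byte payload (one LC3 frame at 96 kbps with 7.5 ms frames: 96000 × 0.0075 / 8 = 90 bytes) takes 420 us on LE 2M versus 832 us on LE 1M.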

1.4. Presentation delay (Peripheral side, managed by Central)

The presentation delay applies at the peripheral but is still negotiated and decided by the central, since the peripheral may have to play (or record) audio in sync with another peripheral.

| Parameter | Central | Peripheral |
|---|---|---|
| Presentation Delay | The presentation_delay field of APP_QoSConf can be updated to a lower value; however, the applied value will be at least the maximum of the minimum presentation delays provided by all peripherals. | Reduce the exposed presentation delay (~controller delay) as much as possible by reducing the codec configurations (PAC) and the number of source and sink ASEs: the minimum presentation delay computed by the audio stack is based on a forecast of the worst possible configuration, including ASEs that may never enter the streaming state and all recorded PACs. Be careful to respect the mandatory configurations required by the profiles; otherwise, consider a vendor-specific profile. |
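The central-side rule in the table can be sketched as follows: the requested presentation_delay is raised to the largest minimum presentation delay reported by the peripherals. This illustrates the constraint only, with hypothetical names; it ignores the maximum presentation delay that each peripheral also reports.

```c
#include <stddef.h>
#include <stdint.h>

/* Effective presentation delay (us): the requested value, raised to the
 * largest minimum presentation delay reported by the peripherals. */
static uint32_t effective_presentation_delay_us(uint32_t requested_us,
                                                const uint32_t *min_pd_us,
                                                size_t n_peripherals)
{
    uint32_t floor_us = 0u;

    for (size_t i = 0; i < n_peripherals; i++) {
        if (min_pd_us[i] > floor_us) {
            floor_us = min_pd_us[i];
        }
    }
    return (requested_us > floor_us) ? requested_us : floor_us;
}
```

Requesting 0 therefore returns the largest peripheral minimum, which is how the "presentation delay set to 0" setting of section 2 behaves.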

2. What is the lowest latency we can achieve?

2.1. Unicast Media use case

For the TMAP project, here are the changes made to reach the minimum latency, with the following restrictions:

- Audio is mono from central to peripheral

- Audio sampling frequency is 48kHz

- No requirement on the quality of service

- No application delay; latency is measured on the digital interface

The central was changed as follows:

- PREFFERED_CONTROLLER_DELAY set to 0: the application takes the minimum controller delay.
- TARGET_MEDIA_STREAM_QOS_CONFIG set to LC3_QOS_48_3_1.
- APP_QoSConf updated for the corresponding QoS with:
  - Max transport latency set to 8 ms
  - RTN set to 0
  - Presentation delay set to 0: the application takes the minimum presentation delay provided by the peripheral.
- ACL interval set to 30 ms, a multiple of the 7.5 ms ISO interval, to stay synchronized with it and avoid scheduling conflicts.
- Additionally, controlling both the central and the peripheral allows deeper optimization. Analysis of the Link Layer processing and scheduling shows that LC3 processing can only be interrupted to handle an ACL event, so CODEC_PROC_MARGIN_US can be lowered to 800 us. Moreover, the radio preparation time CODEC_RF_SETUP_US was measured at 1250 us. This optimization cannot be applied with another client, since the ISO-to-ACL offset may change.

The peripheral was changed as follows:

- APP_NUM_SNK_ASE and APP_MAX_CHANNEL_PER_SNK_ASE set to 1.
- The CALL TERMINAL role removed from APP_TMAP_ROLE, and AUDIO_ROLE_SOURCE removed from APP_AUDIO_ROLE.
- Additionally, because of this very specific configuration, scheduling analysis shows that LC3 processing is only ever delayed by the post-processing of the current ISO event, so CODEC_PROC_MARGIN_US can be set to 400 us.

Enabling logs on the central reports all latencies. The controller delay shown is always the local controller delay, while the remote controller delay is included in the presentation delay.

| Use case | Buffering delay | Application delay (central) | Controller delay (central) | Transport latency C->P | Controller delay (peripheral) | Application delay (peripheral) | Algorithmic delay | Total delay |
|---|---|---|---|---|---|---|---|---|
| lowest | 7.5 ms | 0.0 ms | 4.593 ms | 0.63 ms | 2.154 ms | 0.1 ms | 4 ms | 18.977 ms |
| adding 1 RTN | 7.5 ms | 0.0 ms | 4.593 ms | 1.41 ms | 2.154 ms | 0.1 ms | 4 ms | 19.757 ms |
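The total delay is the plain sum of the components in each row. The sketch below recomputes the totals in microseconds to make the latency budget explicit; the struct and field names are illustrative. The same sum also reproduces the 16.545 ms total of the telephony use case.

```c
#include <stdint.h>

/* One end-to-end latency budget, in microseconds, using the table columns. */
typedef struct {
    uint32_t buffering;
    uint32_t app_central;
    uint32_t ctrl_central;
    uint32_t transport;
    uint32_t ctrl_peripheral;
    uint32_t app_peripheral;
    uint32_t algorithmic;
} latency_budget_t;

static uint32_t total_latency_us(const latency_budget_t *b)
{
    return b->buffering + b->app_central + b->ctrl_central + b->transport
         + b->ctrl_peripheral + b->app_peripheral + b->algorithmic;
}
```

With the "lowest" row ({7500, 0, 4593, 630, 2154, 100, 4000}) the result is 18977 us; replacing the transport latency by 1410 us (one RTN) gives 19757 us.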

2.2. Unicast Telephony use case

For the TMAP project, here are the changes made to reach the minimum latency, with the following restrictions:

- Audio is mono bidirectional

- Audio sampling frequency is 16kHz

- No requirement on the quality of service

- No application delay; latency is measured on the digital interface

The central was changed as follows:

- PREFFERED_CONTROLLER_DELAY set to 0: the application takes the minimum controller delay.
- TARGET_TELEPHONY_STREAM_QOS_CONFIG set to LC3_QOS_16_3_1.
- APP_QoSConf updated for the corresponding QoS with:
  - RTN set to 0
  - Presentation delay set to 0: the application takes the minimum presentation delay provided by the peripheral.
- ACL interval set to 30 ms, a multiple of the 7.5 ms ISO interval, to stay synchronized with it and avoid scheduling conflicts.
- Additionally, controlling both the central and the peripheral allows deeper optimization, but this case is more complex because two ASEs are present (source and sink). Scheduling analysis shows that the ACL event is placed before the ISO event, so it only interrupts the encoding; the decoding is much less interrupted, and the two never overlap. Since the provided audio library does not handle such a specific case, the application can be patched:
  - Set CODEC_RF_SETUP_US to 1200 us (measured).
  - Set CODEC_PROC_MARGIN_US to 0 us.
  - Manage the margin at the application level in the CAP_UNICAST_AUDIO_DATA_PATH_SETUP_REQ_EVT event, by adding 700 us to controller_delay when the direction is BAP_AUDIO_PATH_INPUT, and 300 us otherwise.
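A minimal sketch of this central-side margin patch, assuming the handler has access to the data path direction and to the controller delay in microseconds. The enum below is a hypothetical stand-in for the stack's direction values (such as BAP_AUDIO_PATH_INPUT); the event payload and the way the adjusted value is handed back to the stack are not shown, since their structures are not described here.

```c
#include <stdint.h>

/* Hypothetical stand-in for the stack's data path direction values. */
typedef enum { PATH_DIR_INPUT, PATH_DIR_OUTPUT } path_dir_t;

#define CENTRAL_INPUT_MARGIN_US  700u /* encoding path, interrupted by the ACL event */
#define CENTRAL_OUTPUT_MARGIN_US 300u /* decoding path, much less interrupted */

/* Controller delay after adding the direction-dependent margin (us). */
static uint32_t patched_controller_delay_us(uint32_t controller_delay_us, path_dir_t dir)
{
    return controller_delay_us +
           ((dir == PATH_DIR_INPUT) ? CENTRAL_INPUT_MARGIN_US : CENTRAL_OUTPUT_MARGIN_US);
}
```

Keeping the margin per direction, instead of a single CODEC_PROC_MARGIN_US, is what lets the rarely interrupted decoding path keep a smaller controller delay.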

The peripheral was changed as follows:

- APP_NUM_SNK_ASE and APP_NUM_SRC_ASE set to 1.
- APP_MAX_CHANNEL_PER_SNK_ASE and APP_MAX_CHANNEL_PER_SRC_ASE checked to be set to 1.
- Additionally, because of this very specific configuration and scheduling, margin management can be patched at the application level:
  - Set CODEC_RF_SETUP_US to 1200 us (measured).
  - Set CODEC_PROC_MARGIN_US to 0 us.
  - Manage the margin at the application level in CAP_UNICAST_PREFERRED_QOS_REQ_EVT: controller_delay_min can be forced to be computed without considering the other ASEs (LC3 processing never overlaps), and a margin is added depending on the ASE type: 700 us for the source ASE and 400 us for the sink ASE.

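```c
/* In the CAP_UNICAST_PREFERRED_QOS_REQ_EVT handler: compute the minimum
 * controller delay from the ASEs of the same type only, then add a margin
 * that depends on the ASE type (values in microseconds). */
if (info->Type == ASE_SINK)
{
  /* Sink ASE: 400 us margin */
  controller_delay_min = (num_snk_ases * info->SnkControllerDelayMin) + 400u;
}
else
{
  /* Source ASE: 700 us margin */
  controller_delay_min = (num_src_ases * info->SrcControllerDelayMin) + 700u;
}
```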

Finally, we can compute and measure the following latencies:

| Use case | Buffering delay | Application delay (central) | Controller delay (central) | Transport latency (both directions) | Controller delay (peripheral) | Application delay (peripheral) | Algorithmic delay | Total delay |
|---|---|---|---|---|---|---|---|---|
| path C to P | 7.5 ms | 0.0 ms | 3.349 ms | 0.51 ms | 1.086 ms | 0.1 ms | 4 ms | 16.545 ms |
| path P to C | 7.5 ms | 0.0 ms | 1.086 ms | 0.51 ms | 3.349 ms | 0.1 ms | 4 ms | 16.545 ms |

3. How to reduce the RAM footprint for my use case?

3.1. Audio data path

The audio data path represents a major part of the RAM footprint. Three main types of buffers can be adapted depending on the application: audio input/output buffers, LC3 buffers and the Codec Manager FIFO.

Here are guidelines for implementing a simple hearing aid, a mono audio receiver at 24 kHz using a mono audio interface, starting from the generic configuration of the TMAP Central project.

- Audio in/out buffers must be sized according to 4 parameters:
  - audio role: a device supporting both the sink and source roles needs two separate buffers;
  - audio frequency;
  - number of channels on the audio interface (while I²S is stereo, the SAI IP allows other configurations);
  - sample depth: 16-bit samples use 2 bytes, while 24-bit and 32-bit samples are stored on 4 bytes.

  These buffers must at least be doubled for the ping-pong between the application and the DMA. The TMAP central project supports stereo audio in/out up to 48 kHz at 16 bits per sample, meaning that up to 7680 bytes are used for these buffers (see SAI_SRC_MAX_BUFF_SIZE and SAI_SNK_MAX_BUFF_SIZE), while a simple hearing aid could allocate only 960 bytes.
- LC3 buffers are allocated for each session and channel, and the object size depends on the maximum frequency. The hearing aid could set CODEC_LC3_NUM_ENCODER_CHANNEL to 0 (which also saves the encoder footprint in flash memory) and LC3_DECODER_STRUCT_SIZE to 1. Setting CODEC_MAX_BAND to CODEC_SSWB also sizes the objects for a maximum of 24 kHz. This simple configuration saves more than 24 kbytes compared to the generic TMAP central.
- The Codec Manager internal FIFO can be reduced, at the cost of a smaller supported controller delay range. CODEC_POOL_SUB_SIZE could then be set to 480 to hold 8 frames of 60 bytes per channel, and aCodecPacketsMemory could be sized for a single channel, saving 3360 bytes. This works because the Codec Manager divides the given pool into MAX_CHANNEL sub-pools, but merges sub-pools if needed when the data path is created.
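The sizes quoted above follow from simple arithmetic. The sketch below reproduces them, assuming 10 ms frames (which yields the 7680-byte and 960-byte figures) and ping-pong buffering; the function names are illustrative and not part of the Codec Manager API.

```c
#include <stdint.h>

/* Audio in/out buffer size (bytes) for one audio role, doubled for the
 * ping-pong between the application and the DMA. */
static uint32_t audio_io_buffer_bytes(uint32_t sample_rate_hz, uint32_t frame_us,
                                      uint32_t channels, uint32_t bytes_per_sample)
{
    uint32_t samples_per_frame =
        (uint32_t)(((uint64_t)sample_rate_hz * frame_us) / 1000000u);

    return 2u * samples_per_frame * channels * bytes_per_sample; /* x2: ping-pong */
}

/* LC3 frame size (bytes) for a given bitrate and frame duration. */
static uint32_t lc3_frame_bytes(uint32_t bitrate_bps, uint32_t frame_us)
{
    return (uint32_t)(((uint64_t)bitrate_bps * frame_us) / (8u * 1000000u));
}
```

Two roles × audio_io_buffer_bytes(48000, 10000, 2, 2) gives 7680 bytes, audio_io_buffer_bytes(24000, 10000, 1, 2) gives 960 bytes, and 8 × lc3_frame_bytes(48000, 10000) gives the 480-byte CODEC_POOL_SUB_SIZE (60-byte frames correspond to 48 kbps at 10 ms).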

3.2. Audio stack

For fine tuning of the RAM footprint, the audio stack objects can be adjusted as well: the GAF (Generic Audio Framework) configuration and the GATT resources of the profiles. Please refer to section 3 of Bluetooth LE Audio - STM32WBA Architecture and Integration for more details.

Additionally, the buffer given to the host stack at initialization (pInitParams.bleStartRamAddress) can be adjusted, in particular by checking the NUM_LINKS parameter.

4. How to increase audio quality from 16 to 24 or 32 bits per sample?

Increasing the sample depth on a data path makes sense only if both the source and the sink support it. The current audio specification does not handle this parameter, so it must be forced or negotiated out of band.

The sample depth only has an impact at the application level, between the audio peripheral and the LC3 codec. The LC3 codec itself already supports 24-bit and 32-bit samples and is configured using the HCI Configure Data Path command. The tradeoff of this change is increased power consumption, due to the higher clock rate on the SAI, and a larger RAM footprint for the audio in/out buffers.

Due to the 32-bit architecture, a 24-bit sample is handled as a 32-bit sample, and some padding must be done. Here are the modifications for switching to the 24-bit format:

- If low power is used, check the restrictions on downclocking due to the higher SAI BCLK rate.
- Configure the SAI to use 64-bit frames:
  - BSP_AUDIO_IN_Init() can be updated with:
    - mx_config.DataSize set to SAI_DATASIZE_24
    - mx_config.FrameLength set to 64
    - mx_config.ActiveFrameLength set to 32
    - Audio_Out_Ctx[0].BitsPerSample set to AUDIO_RESOLUTION_24B
  - This configuration is already supported by BSP_AUDIO_OUT_Init().
- Then update the application:
  - Change the input/output buffers (aSrcBuff/aSnkBuff) to uint32_t.
  - Configure the interface with the codec by updating param.SampleDepth; note that the 24-bit format requires padding.
  - Start the DMA with an appropriate length: since the DMA now transfers words, BSP_AUDIO_IN_Record() should call the HAL driver with NbrOfBytes / 4U, and the application must use bytes_per_sample equal to 4.
- Finally, at the source, for each call to CODEC_SendData(), move the sign bit of each zero-padded 24-bit sample so that the 32-bit word holds the sign-extended value:

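```c
/* Sign-extend each 24-bit sample (right-aligned in a 32-bit word) in place.
 * This start address is for the half-complete callback; for the complete
 * callback, start at &aSrcBuff[AudioFrameSize/2] instead. */
uint32_t *ptr = &aSrcBuff[0];
for (int32_t k = 0; k < Source_frame_size / 2; k++)
{
  /* Move bit 23 up to bit 31, then a signed division by 256 brings the
   * sample back down while propagating the sign bit. */
  *ptr = (uint32_t)((int32_t)(*ptr << 8) / 256);
  ptr++;
}
```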

Tip: to avoid the division, the 32-bit word can be handled directly as a 32-bit sample by setting param.SampleDepth to 32.

- At the sink, the padding can be done inside the weak function CODEC_NotifyDataReady(), which is called for each frame of each ASE. If stereo is handled with two ASEs, this callback is called twice, with decoded_data shifted by one sample.

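```c
/* decoded_data holds one decoded frame for this ASE; with a mono ASE, the
 * samples occupy every other word of the interleaved stereo buffer. */
uint32_t *ptr = (uint32_t *)(decoded_data);
for (int32_t k = 0; k < Sink_frame_size / 4; k++) /* loop length doubles if the ASE is stereo */
{
  /* Shift the 24 significant bits up by 8 (x256), then shift back as
   * unsigned: the upper byte is cleared (zero padding). */
  *ptr = (uint32_t)((int32_t)(*ptr) * 256) >> 8;
  ptr += 2; /* increment is 1 if the ASE is stereo */
}
```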
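To check both conversions off target, the helpers below express them with masks instead of shifts and division. This is an illustrative rewrite, not the STM32CubeWBA code: sign_extend_24() gives the same result as the source-side expression for zero-padded inputs, without relying on the implementation-defined conversion of a large uint32_t to int32_t, and zero_pad_24() matches the sink-side expression for sign-extended inputs.

```c
#include <stdint.h>

/* Source side: 24-bit sample right-aligned in a 32-bit word (upper byte
 * ignored) -> sign-extended 32-bit two's complement value. */
static uint32_t sign_extend_24(uint32_t raw)
{
    /* Flip bit 23, then subtract 2^23: maps 0x000000..0xFFFFFF onto
     * -0x800000..0x7FFFFF without any implementation-defined step. */
    int32_t value = (int32_t)((raw & 0x00FFFFFFu) ^ 0x00800000u) - (int32_t)0x00800000;
    return (uint32_t)value;
}

/* Sink side: keep the 24 significant bits right-aligned and clear the
 * upper byte (zero padding). */
static uint32_t zero_pad_24(uint32_t sample)
{
    return sample & 0x00FFFFFFu;
}
```

For example, the most negative 24-bit sample 0x00800000 becomes 0xFF800000 at the source, and zero_pad_24() turns it back into 0x00800000 at the sink.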

5. What are the parameters impacting power consumption in an audio application?

When the application is in audio streaming mode, the Standby and Stop low-power modes cannot be used. The SAI bus streams continuously and needs the audio clock from the PLL (P or Q output) to remain active, so only Sleep mode can be used.

Given the average consumption in audio mode, enabling the SMPS is recommended.

It is also more efficient to run audio processing from the PLL to shorten the time spent in Run mode, and then to lower SYSCLK during Sleep mode to reduce power consumption. Note that some peripherals impose a minimum frequency: for example, the SAI requires the APB2 clock to be at least twice the bit clock frequency.
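The APB2 constraint can be checked with a quick calculation. The sketch below derives the SAI bit clock from the sample rate and the frame length in bits, then applies the "at least twice the bit clock" rule. For example, the 64-bit frames used for 24-bit samples in section 4 give 3.072 MHz at 48 kHz, so APB2 must stay at or above 6.144 MHz; assuming a 32-bit frame (two 16-bit slots), both values are halved. The helper names are illustrative.

```c
#include <stdint.h>

/* SAI bit clock (Hz): one frame per sample period, frame_length_bits
 * bit clocks per frame. */
static uint32_t sai_bclk_hz(uint32_t sample_rate_hz, uint32_t frame_length_bits)
{
    return sample_rate_hz * frame_length_bits;
}

/* Lowest APB2 clock allowed by the "at least twice BCLK" rule. */
static uint32_t min_apb2_hz(uint32_t bclk_hz)
{
    return 2u * bclk_hz;
}
```

This floor limits how far SYSCLK and the APB2 prescaler can be lowered during Sleep mode.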

In audio mode, the average consumption of the STM32WBA depends on the following parameters:

- Radio activity
  - TX power: its impact also depends on the link quality when the autonomous power control feature is enabled. Note that an audio source has a longer TX time than a sink.
  - ISO link parameters such as the retransmission number (depending on link quality for CIS), PHY, packet size...
- CPU load and run duration
  - mainly depends on the codec configuration: audio frequency, direction and number of channels
- Sleep consumption
  - depends on all peripherals that must remain active during sleep: at least the GPDMA, which performs transfers between RAM and the SAI IP. Here the chip keeps the PLL running, so VOS1 is necessary.

Measurements show that the current flowing through the audio MCLK and I²S lines represents a major part of the sleep consumption when VDD is set to 3.3 V. Lowering VDD when possible is therefore recommended, especially when the bus rate is high (which depends on the audio frequency, the number of channels and the bits per sample).