This article describes the documentation available for X-CUBE-AI, in particular the contents of the X-CUBE-AI embedded documentation and how to access it. The embedded documentation is installed with X-CUBE-AI, so it always matches the installed version of X-CUBE-AI.


1. X-CUBE-AI getting started and user guide

There are four main documentation items for X-CUBE-AI, complemented by wiki articles:

- Documentation available on st.com:

- The release notes for each version available within X-CUBE-AI

- Embedded documentation available within the X-CUBE-AI Expansion Package and described below.

2. X-CUBE-AI embedded documentation contents

The embedded documentation describes the following topics (for X-CUBE-AI version 7.0.0) in detail:

- User guide

- Installation: specific environment settings to use the command-line interface in a console.

- Command-line interface: the stm32ai application is a console utility, which provides a complete and unified command-line interface (CLI) to generate the X-CUBE-AI optimized library for an STM32 device family from a pre-trained DL/ML model. This section describes the CLI in detail.

- Embedded inference client API: this section describes the embedded inference client API, which must be used by a C application layer (AI client) to use a deployed C model.

- Evaluation report and metrics: this section describes the different metrics (and associated computing flow) that are used to evaluate the performance of the generated C files (or C model), mainly through the validate command.

- Quantized model and quantize command: the X-CUBE-AI code generator can be used to deploy a quantized model (8-bit integer format). Quantization (also called calibration) is an optimization technique that compresses a 32-bit floating-point model: it reduces the model size and improves CPU/MCU usage and latency, at the expense of a small loss of accuracy. This section describes how X-CUBE-AI supports quantized models and the CLI's internal post-training quantization process.

- Advanced features

- Relocatable binary network support: a relocatable binary model is a binary object that can be installed and executed anywhere in an STM32 memory sub-system. It contains a compiled version of the generated NN C files, including the requested forward kernel functions and the weights. The main objective is to provide a flexible way to upgrade an AI-based application without generating and programming the whole end-user firmware again. For example, this is the primary element needed to use the FOTA (Firmware Over-The-Air) technology. This section describes how to build and use a relocatable binary.

- Keras LSTM stateful support: this section describes how X-CUBE-AI provides initial support for the Keras stateful LSTM.

- Keras Lambda/custom layer support: this section explains how to import a Keras model containing Lambda or custom layers, using the approach that suits the nature of the model.

- Platform Observer API: for advanced run-time, debug or profiling purposes, an AI client application can register a call-back function to be notified before and/or after the execution of a C node. The call-back can be used to measure the execution time, dump the intermediate values, or both. This section describes how to use and take advantage of this feature.

- STM32 CRC IP as shared resources: to use the network_runtime library, the STM32 CRC IP must be enabled (or clocked); otherwise, the application hangs. To improve the usage of the CRC IP and consider it as a shared resource, two optional specific hooks or call-back functions are defined to facilitate its usage with a resource manager. This section describes how it works.

- TensorFlow™ Lite for Microcontrollers support: the X-CUBE-AI Expansion Package integrates a specific path that generates a ready-to-use STM32 IDE project embedding a TensorFlow™ Lite for Microcontrollers runtime and its associated TFLite (TensorFlow™ Lite) model. This can be considered as an alternative to the default X-CUBE-AI solution for deploying an AI solution based on a TFLite model. This section describes how X-CUBE-AI supports TensorFlow™ Lite for Microcontrollers.

- How to

- How to use USB-CDC driver for validation: this article explains how to enable the USB-CDC profile to speed up validation on the board. A client USB device with the STM32 Communication Device Class (namely the Virtual COM port) is used as the communication link with the host. It avoids the overhead of the ST-LINK bridge connecting the UART pins to or from the ST-LINK USB port. However, an STM32 Nucleo or Discovery board with a built-in USB device peripheral is required.

- How to run a C model locally: this article explains how to run the generated C model locally. The first goal is to enhance an end-user validation process with a large data set, including the specific pre- and post-processing functions, using the X-CUBE-AI inference run-time. It is also useful for integrating an X-CUBE-AI validation step in a CI/CD/MLOps flow without an STM32 board.

- How to upgrade an STM32 project: this article describes how to upgrade an STM32CubeMX-based or proprietary source tree to a new version of the X-CUBE-AI library, either manually or with the CLI.

- Supported DL/ML frameworks: lists the supported Deep Learning frameworks, and the operators and layers supported for each of them.

- Keras toolbox: lists the Keras layers (or operators) that can be imported and converted. Keras is supported through the TensorFlow™ backend with channels-last dimension ordering. Keras.io versions 2.0 to 2.5.1 are supported, while networks defined in Keras 1.x are not officially supported. TF Keras is supported up to version 2.5.0.

- TensorFlow™ Lite toolbox: lists the TensorFlow™ Lite layers (or operators) that can be imported and converted. TensorFlow™ Lite is the format used to deploy a Neural Network model on mobile platforms. STM32Cube.AI imports and converts the .tflite files based on the flatbuffer technology. The official ‘schema.fbs’ definition (tag v2.5.0) is used to import the models. A number of operators from the supported operator list are handled, including the quantized models and/or operators generated by the Quantization Aware Training process, by the post-training quantization process, or both.

- ONNX toolbox: lists the ONNX layers (or operators) that can be imported and converted. ONNX is an open format built to represent Machine Learning models. A subset of the operators from Opsets 7, 8, 9 and 10 of ONNX 1.6 is supported.

- Frequently asked questions

- Generic aspects

- How to know the version of the Deep Learning framework components used?

- Channel first support for ONNX model

- How is the CMSIS-NN library used?

- What is the EABI used for the network_runtime libraries?

- X-CUBE-AI Python™ API availability?

- Stateful LSTM support?

- How is the ONNX optimizer used?

- How is the TFLite interpreter used?

- TensorFlow™ Keras (tf.keras) vs. Keras.io

- Is it possible to update a model on the firmware without having to do a full firmware update?

- Keras model or sequential layer support?

- Is it possible to split the weights buffer?

- Is it possible to place the “activations” buffer in different memory segments?

- How to compress the non-dense/fully-connected layers?

- Is it possible to apply a compression factor different from x8, x4?

- How to specify or indicate a compression factor by layer?

- Why is a small negative ratio reported for the weights size with a model without compression?

- Is it possible to dump/capture the intermediate values during inference execution?

- Validation aspects

- Validation on target vs. validation on desktop

- How to interpret the validation results?

- How to generate npz/npy files from an image data set?

- How to validate a specific network when multiple networks are embedded into the same firmware?

- Reported STM32 results are incoherent

- Unable to perform automatic validation on target

- Long time process or crash with a large test data set

- Quantization and post-training quantization process

- Backward compatibility with X-CUBE-AI 4.0 and X-CUBE-AI 4.1

- Is it possible to use the Keras post-training quantization process through the UI?

- Is it possible to use the Keras post-training quantization process with a non-classifier model?

- Is it possible to use the compression for a quantized model?

- How to apply the Keras post-training quantization process on a non-Keras model?

- TensorFlow™ Lite, OPTIMIZE_FOR_SIZE option support

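
To make the 8-bit quantization mentioned under "Quantized model and quantize command" above more concrete, here is a minimal Python sketch of affine (scale and zero-point) quantization of a few floating-point weights. It is a generic textbook scheme given for illustration only, not necessarily the one implemented by X-CUBE-AI. The names `quantize_params`, `quantize` and `dequantize` are hypothetical and are not part of any X-CUBE-AI API.

```python
from typing import List, Sequence, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit integer range


def quantize_params(values: Sequence[float]) -> Tuple[float, int]:
    """Return (scale, zero_point) mapping the value range onto [QMIN, QMAX]."""
    # The range is widened to contain 0.0 so that zero is represented exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # Fall back to 1.0 to avoid a zero scale when all values are 0.0.
    scale = (hi - lo) / (QMAX - QMIN) or 1.0
    zero_point = round(QMIN - lo / scale)
    return scale, zero_point


def quantize(values: Sequence[float], scale: float, zero_point: int) -> List[int]:
    """Map floats to 8-bit integers, clamping to the representable range."""
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Map 8-bit integers back to approximate float values."""
    return [(q - zero_point) * scale for q in q_values]


# Illustrative weights (made-up values, not taken from a real model).
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
scale, zero_point = quantize_params(weights)
q_weights = quantize(weights, scale, zero_point)
restored = dequantize(q_weights, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 weights:", q_weights)
print("max round-trip error:", max_error)
print("size: %d bytes as float32, %d bytes as int8" % (4 * len(weights), len(weights)))
```

Each weight shrinks from 4 bytes to 1 byte, and the round-trip error stays within one quantization step (`scale`). This is the kind of small accuracy degradation that the section describes.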

3. X-CUBE-AI embedded documentation access

To access the embedded documentation, first install X-CUBE-AI. The installation process is described in the getting started user manual. Once the installation is done, the documentation is available in the installation directory under X-CUBE-AI/7.0.0/Documentation/index.html (the example is given for version 7.0.0; adapt it to the version used). On Windows®, the documentation is located here by default (replace username with your Windows® username): file:///C:/Users/username/STM32Cube/Repository/Packs/STMicroelectronics/X-CUBE-AI/7.0.0/Documentation/index.html. The release notes are available here: file:///C:/Users/username/STM32Cube/Repository/Packs/STMicroelectronics/X-CUBE-AI/7.0.0/Release_Notes.html.

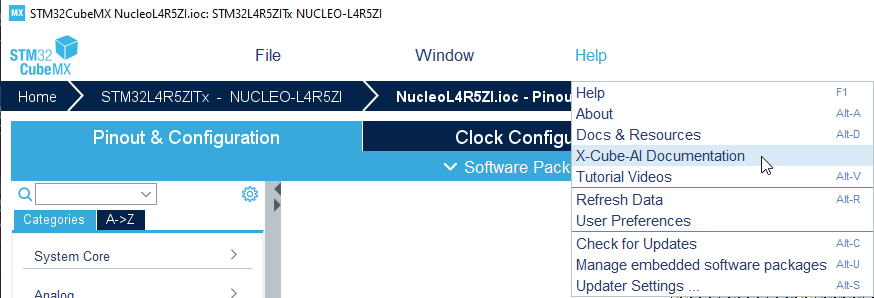

The embedded documentation can also be accessed through the STM32CubeMX UI, once the X-CUBE-AI Expansion Package has been selected and loaded, by clicking on the "Help" menu and then on "X-CUBE-AI documentation":