This article shows how to use the Teachable Machine online tool with STM32Cube.AI through the ST Edge AI model zoo to create an image classifier running on the STM32H747I-DISCO Discovery kit. This document focuses on AI for the STM32 MCU family and does not cover AI on other ST product families.

This tutorial is divided into two parts:

- The first part shows how to use the Teachable Machine tool to train and export a deep learning model.

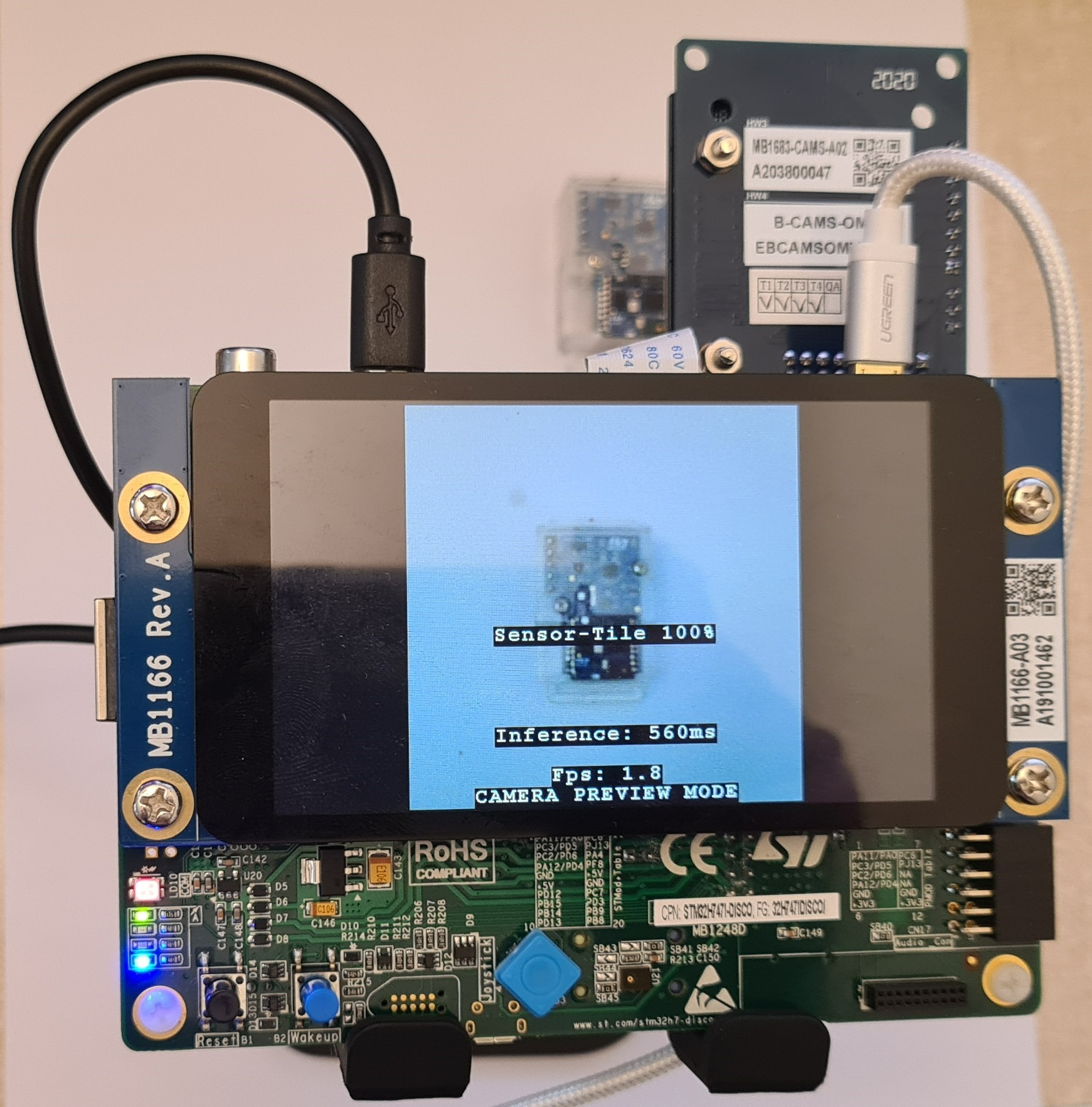

- The second part explains how to deploy this new model using the ST Edge AI model zoo and perform live inference on an STM32 board with a camera.

The whole process is described in the figure below:

1. Prerequisites

1.1. Hardware

- STM32H747I-DISCO Discovery kit

- B-CAMS-OMV flexible camera adapter board

- A USB Type-A or USB Type-C® to Micro-B cable (a second one is optional)

- Optional: a webcam

1.2. Software

- STM32CubeIDE

- X-CUBE-AI (STM32Cube.AI)

- STM32CubeProgrammer (STM32CubeProg)

- ST Edge AI model zoo Version 2

2. Training a model using Teachable Machine

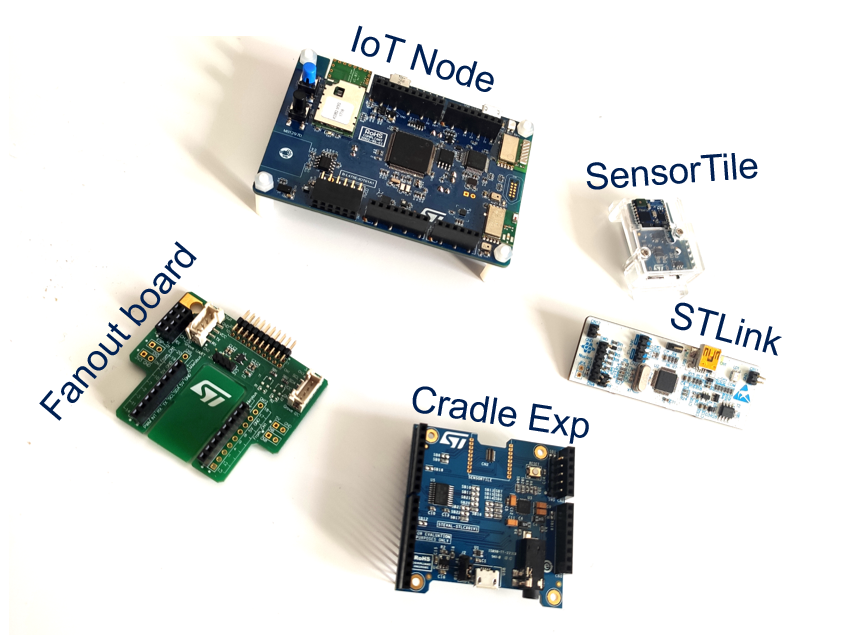

In this section, we train a deep neural network in the browser using Teachable Machine. We first need to choose something to classify. In this example, we decide to classify ST boards and modules. The chosen boards are shown in the figure below:

You can choose whatever object you want to classify: fruits, pasta, animals, people, and so on.

To get started, open https://teachablemachine.withgoogle.com/, preferably from the Chrome browser.

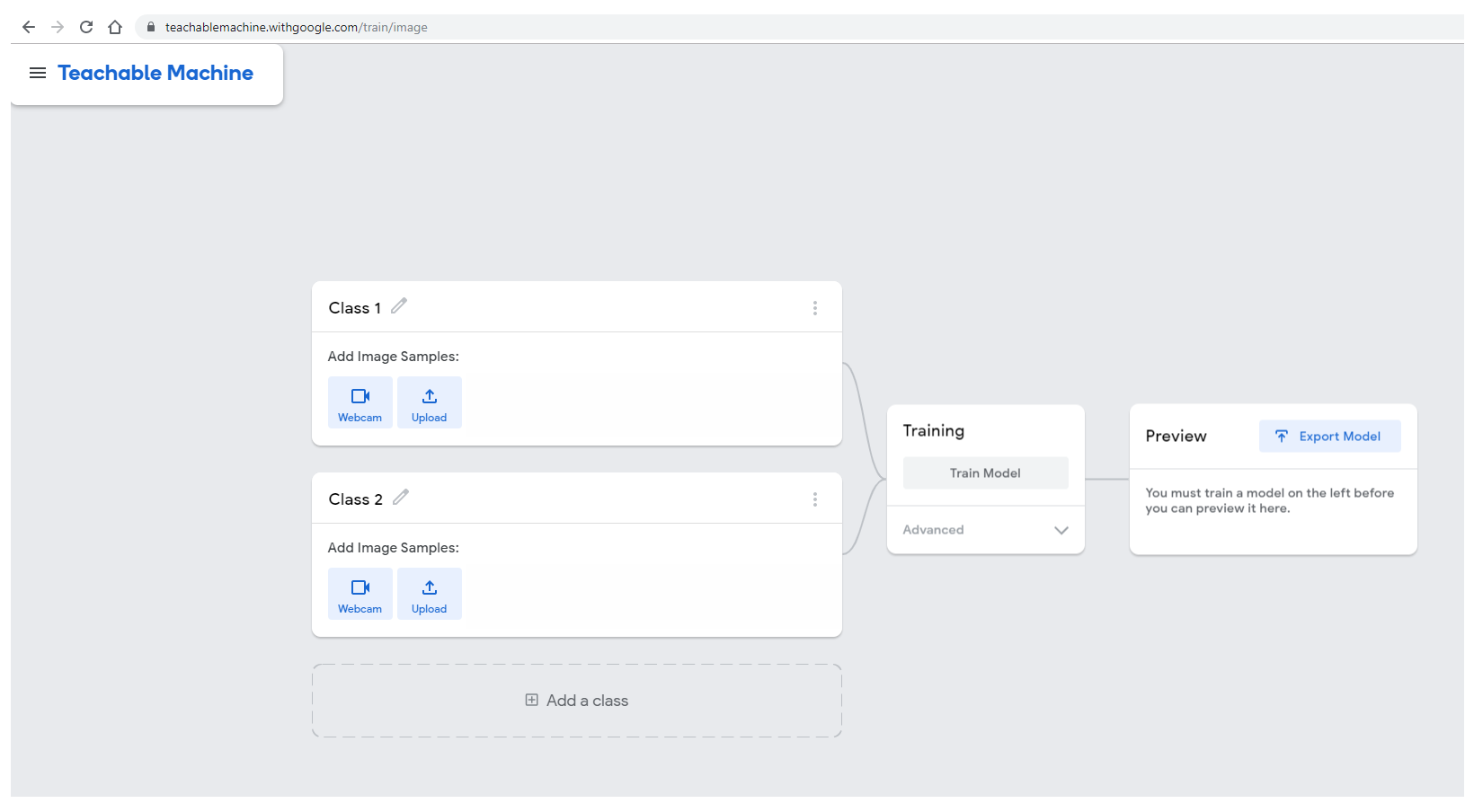

After clicking Get started, select Image Project, and then Standard image model (224x224px color images). You are then presented with the following interface.

2.1. Adding training data

For each category that you want to classify, edit the class name by clicking on the pencil icon. In this example, we choose to start with SensorTile.

To add images with your webcam, click the webcam icon and record some images. If you have image files on your computer, click Upload and select the directory containing your images.

The STM32H747 Discovery kit combined with the B-CAMS-OMV camera daughter board can be used as a USB webcam. Using the ST kit for data collection helps to obtain better results because the same camera is then used for both data collection and inference once the model has been trained.

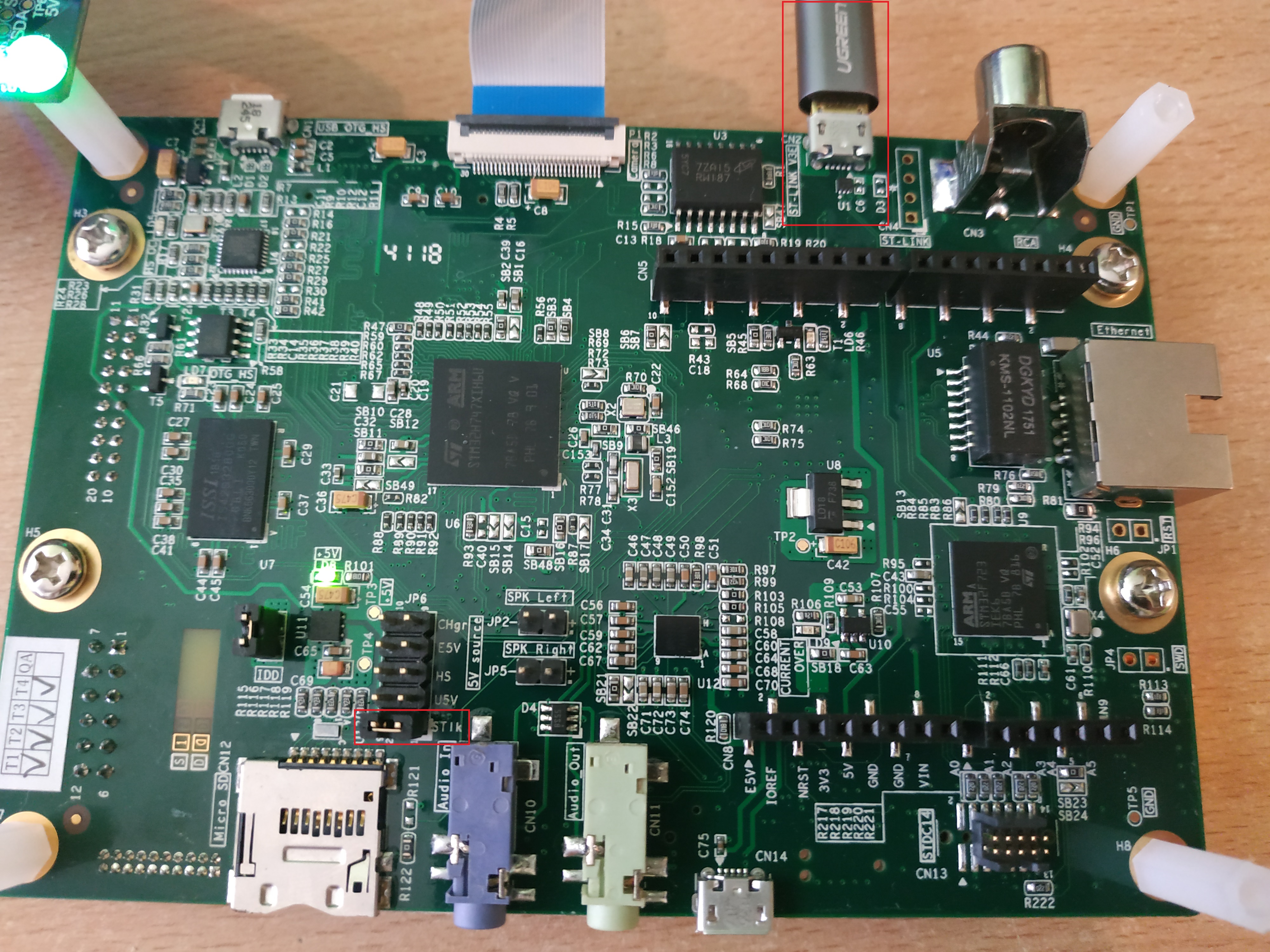

To use the ST kit as a webcam, program it with the binary from the STMicroelectronics GitHub repository. First, plug your board into your computer using the ST-LINK port. Make sure that the JP6 jumper is set to STlk.

After you plug the USB cable into your computer, the board appears as a mounted device.

Clone the USB webcam application repository from GitHub. It contains a complete USB webcam application project.

A precompiled binary is located under x-cube-wecam/Binary/STM32H747I-DISCO_Webcam.bin.

Drag and drop the binary file onto the mounted board device. This programs the binary into the board.

Unplug the board, set the JP6 jumper to the HS position, and plug the board back in using the USB OTG port.

For convenience, you can connect two USB cables, one to the USB OTG port and the other to the ST-LINK USB port, and set the JP6 jumper to STlk. In this case, the board can be programmed and switched from USB webcam mode to test mode without changing the jumper position.

Depending on how you oriented the camera board, you might prefer to flip the image. If you do so, you must use the same option when generating the code on the STM32.

Once you have a satisfactory number of images for this class, repeat the process for the next class until your dataset is complete. Note the order of the classes used to train your model: you must apply the corresponding names in the same order during deployment.

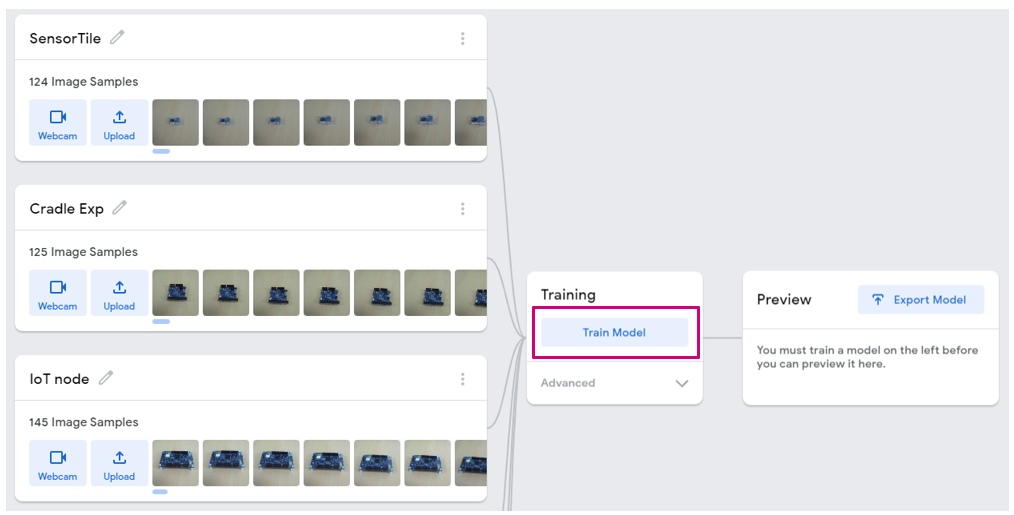

2.2. Training the model

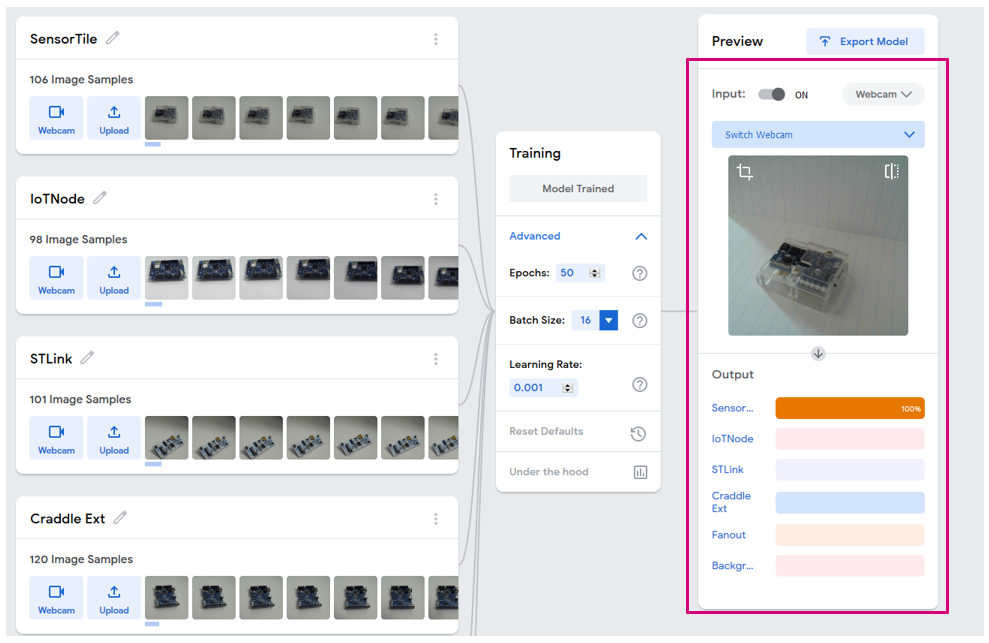

Once a sufficient amount of data is collected, it is time to train a deep learning model for classifying the different objects. To do this, click on the Train Model button as shown below:

This process can take a while, depending on the amount of data. To monitor the training progress, select Advanced and click Under the hood. A side panel displays the training metrics.

When the training is complete, you can see the predictions of your network on the "Preview" panel. You can either choose a webcam input or an imported file.

2.2.1. What happens under the hood (for the curious ones)

Teachable Machine is based on TensorFlow.js, which allows neural network training and inference in the browser. Because training an image classifier from scratch requires a lot of time, Teachable Machine uses a technique called transfer learning: the webpage downloads a MobileNetV2 model previously trained on a large image dataset of 1000 categories. The convolution layers of this pretrained model already extract features efficiently, so they do not need to be trained again. Only the last layers of the neural network are trained using TensorFlow.js, which saves a lot of time.
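To make the idea concrete, here is a minimal, self-contained Python sketch of transfer learning under simplifying assumptions. A fixed function, `frozen_features` (hypothetical), stands in for the pretrained MobileNetV2 convolution layers and is never updated; only a small dense + softmax head is trained with stochastic gradient descent. This is not Teachable Machine's code, which runs in TensorFlow.js; it only illustrates which part of the network is trained.

```python
import math
import random


def frozen_features(x):
    """Stand-in for the pretrained convolution layers (hypothetical).

    It is a fixed function: the training loop below never changes it.
    """
    return [x[0], x[1], x[0] * x[1]]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logits_for(weights, biases, f):
    return [sum(w * v for w, v in zip(wc, f)) + b for wc, b in zip(weights, biases)]


def train_head(samples, labels, num_classes, epochs=500, lr=0.5, seed=0):
    """Train only the final dense + softmax layer on frozen features (SGD)."""
    rng = random.Random(seed)
    feats = [frozen_features(s) for s in samples]  # extracted once, never retrained
    dim = len(feats[0])
    weights = [[rng.uniform(-0.01, 0.01) for _ in range(dim)] for _ in range(num_classes)]
    biases = [0.0] * num_classes
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            probs = softmax(logits_for(weights, biases, f))
            for c in range(num_classes):
                grad = probs[c] - (1.0 if c == y else 0.0)  # d(cross-entropy)/d(logit c)
                for i in range(dim):
                    weights[c][i] -= lr * grad * f[i]
                biases[c] -= lr * grad
    return weights, biases


def predict(weights, biases, x):
    logits = logits_for(weights, biases, frozen_features(x))
    return max(range(len(logits)), key=logits.__getitem__)
```

Because the features are computed once and only the small head is updated, each training step is cheap, which is the same reason transfer learning makes in-browser training practical.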

2.3. Exporting the model

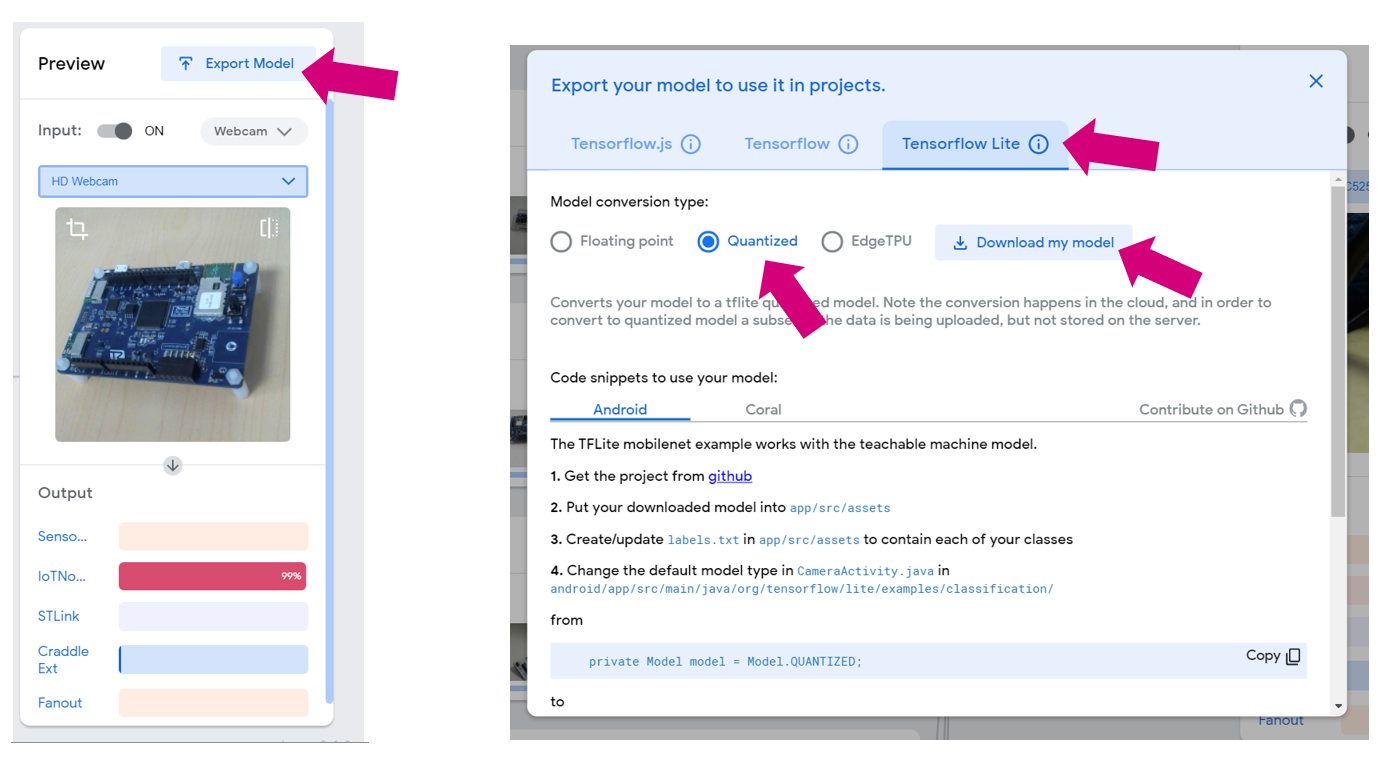

If you are satisfied with your model, it is time to export it. To do so, click the Export Model button. In the pop-up window, select Tensorflow Lite, check Quantized, and click on Download my model.

Since the model conversion is done in the cloud by Teachable Machine, this step can take a few minutes.

Your browser downloads a zip file containing the model as a .tflite file and a .txt file containing your labels. Extract these two files into an empty directory, referred to as path_to_the_model in the rest of this tutorial.

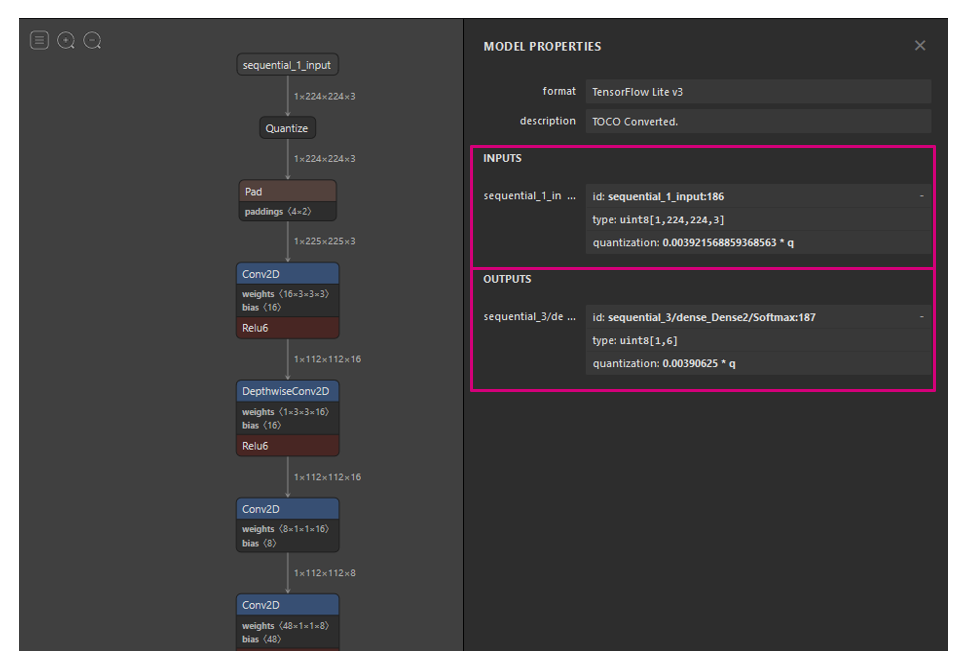

2.3.1. Inspecting the model using Netron (optional)

It is always useful to look at a model's architecture as well as at its input and output formats and shapes. To do this, use the Netron web app.

Visit https://lutzroeder.github.io/netron/, select Open model, and then choose the model.tflite file from Teachable Machine. Click on sequental_1_input: it shows that the input is of type uint8 and of size [1, 224, 224, 3]. For the outputs, in this example we have 6 classes, and the output shape is [1, 6]. The quantization parameters are also reported. Refer to part 3 for how to use them.
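Netron reports the output quantization parameters as a scale and a zero point. Assuming the usual TensorFlow Lite affine scheme, real_value = scale * (quantized_value - zero_point), the uint8 scores can be mapped back to probabilities and the predicted class picked with an argmax. The scale, zero point, and raw output values below are hypothetical placeholders; read the actual values for your model in Netron.

```python
def dequantize(values, scale, zero_point):
    """Map quantized integers back to real values (TFLite affine scheme)."""
    return [scale * (q - zero_point) for q in values]


def top_class(raw_output, class_names, scale, zero_point):
    """Return (class_name, score) for the highest dequantized score."""
    scores = dequantize(raw_output, scale, zero_point)
    best = max(range(len(scores)), key=scores.__getitem__)
    return class_names[best], scores[best]


# Hypothetical parameters: replace them with those shown by Netron for your model.
OUTPUT_SCALE = 1.0 / 256.0
OUTPUT_ZERO_POINT = 0
CLASS_NAMES = ["SensorTile", "IoTNode", "STLink", "Craddle_Ext", "Fanout", "Background"]

# Example uint8 output of shape [1, 6], with the batch dimension dropped.
raw = [3, 240, 5, 0, 8, 0]
name, score = top_class(raw, CLASS_NAMES, OUTPUT_SCALE, OUTPUT_ZERO_POINT)
print(name, score)
```

Since the mapping is monotonic for a positive scale, the argmax of the raw uint8 values already gives the predicted class; dequantizing is only needed to display a confidence score.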

3. Porting to a target STM32H747I-DISCO

In this section, you deploy the application on the target through the ST Edge AI model zoo.

The ST Edge AI model zoo is a collection of pretrained machine learning models and a set of tools that aims to help users easily implement AI on STM32 MCUs. It is available on GitHub at https://github.com/STMicroelectronics/stm32ai-modelzoo.

Download the ST Edge AI model zoo. The path to your installation is referred to as %MODEL_ZOO_DIR%. The README.MD file at the root of %MODEL_ZOO_DIR% describes the structure of the zoo extensively. This tutorial focuses on the bare minimum needed to deploy on STM32.

The zoo is written in the Python™ programming language and is compatible with Python 3.9 and 3.10. Download and install Python. The zoo also requires a set of additional Python™ packages listed in the requirements.txt file. Install them with:

pip install -r requirements.txt
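Because only Python 3.9 and 3.10 are stated as compatible, it can help to confirm that the interpreter used to run pip and the zoo is one of them. The following is a small sketch; the helper name is hypothetical and the supported set simply mirrors the versions given in this article.

```python
import sys

SUPPORTED = {(3, 9), (3, 10)}  # versions stated in this article


def python_is_supported(version_info=sys.version_info):
    """Return True if the major.minor version is one the zoo supports."""
    return tuple(version_info[:2]) in SUPPORTED
```

For example, `print(python_is_supported())` prints `True` only when run with a Python 3.9 or 3.10 interpreter.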

The zoo is organized by AI use case. Since the model trained with Teachable Machine performs image classification, move to the image classification directory:

cd %MODEL_ZOO_DIR%\image_classification

This directory gives access to various image classification models and to the scripts that manipulate them. The scripts are located in the src directory:

cd src

The main entry point for image classification is stm32ai_main.py. The actions executed by the script are described in a configuration file. There are many configuration file examples in the config_file_examples directory.

To deploy the model on the board, select the config_file_examples/deployment_config.yaml file and edit it.

For this activity the ST Edge AI model zoo performs a succession of actions on your behalf:

- Convert your quantized model into C code that runs efficiently on STM32

- Integrate the generated library into an existing image classification application

- Compile the application and generate the firmware for the STM32H747I-DISCO

- Program the board

- Run the application

The deployment is configured by editing the config_file_examples/deployment_config.yaml configuration file in this directory. The README.MD file describes all the possible configuration options in detail; this tutorial covers only the required changes.

3.1. Configuring the conversion from model description to C Code

There are two ways to convert a TensorFlow™ Lite model to optimized C code for STM32 usage:

- ST Edge AI Developer Cloud: an online platform and services allowing the creation, optimization, benchmarking, and generation of AI for the STM32 microcontrollers.

- STM32Cube X-CUBE-AI package: an STM32CubeMX expansion package offering features similar to those of the ST Edge AI Developer Cloud, but running offline and integrated into STM32CubeMX.

3.1.1. ST Edge AI Developer Cloud

In the config_file_examples/deployment_config.yaml configuration file, under the tools/stm32ai keys, set on_cloud to True:

tools:
  stm32ai:
    version: 8.1.0
    optimization: balanced
    on_cloud: True

Prepare your ST website credentials. You are asked for them during the deployment using the ST Edge AI Developer Cloud.

3.1.2. X-CUBE-AI

As an alternative to the ST Edge AI Developer Cloud, you can use a local installation of X-CUBE-AI. X-CUBE-AI is installed through STM32CubeMX, so in a Windows® environment it is located by default in:

- C:/Users/<USERNAME>/STM32Cube/Repository

Assuming that version 8.1.0 is used, the base directory is:

- C:/Users/<USERNAME>/STM32Cube/Repository/Packs/STMicroelectronics/X-CUBE-AI/8.1.0

Inside the package, since we are running on a Microsoft® Windows® computer, the path to the STM32Cube.AI executable is:

- C:/Users/<USERNAME>/STM32Cube/Repository/Packs/STMicroelectronics/X-CUBE-AI/8.1.0/Utilities/windows/stm32ai.exe

For X-CUBE-AI version 9.0 and above, the executable is named stedgeai.exe instead of stm32ai.exe, so the path to the executable is:

- C:/Users/<USERNAME>/STM32Cube/Repository/Packs/STMicroelectronics/X-CUBE-AI/9.0.0/Utilities/windows/stedgeai.exe
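Because the executable name depends on the X-CUBE-AI version, a small helper can build the expected path. This is a hypothetical convenience function based only on the default Windows® layout and the naming rule described above (stm32ai.exe before version 9.0, stedgeai.exe from 9.0 on); adjust the repository root if you installed the pack elsewhere.

```python
from pathlib import PureWindowsPath

# Default STM32CubeMX pack location described above (assumed unchanged).
PACKS = "STM32Cube/Repository/Packs/STMicroelectronics/X-CUBE-AI"


def stm32ai_executable(version, username):
    """Return the expected path of the X-CUBE-AI command-line tool (hypothetical helper)."""
    major = int(version.split(".")[0])
    exe = "stedgeai.exe" if major >= 9 else "stm32ai.exe"  # renamed in version 9.0
    path = PureWindowsPath("C:/Users", username, PACKS, version, "Utilities", "windows", exe)
    return path.as_posix()  # forward slashes, as used in deployment_config.yaml
```

For example, `stm32ai_executable("8.1.0", "<USERNAME>")` gives the 8.1.0 path shown above, ready to paste into the configuration file.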

Inside the ST Edge AI model zoo, in the config_file_examples/deployment_config.yaml file, set on_cloud to False and set the path_to_stm32ai key, under tools/stm32ai, to the path of the executable:

tools:
  stm32ai:
    version: 8.1.0
    optimization: balanced
    on_cloud: False
    path_to_stm32ai: C:/Users/<USERNAME>/STM32Cube/Repository/Packs/STMicroelectronics/X-CUBE-AI/<*.*.*>/Utilities/windows/stm32ai.exe

3.2. Compiler configuration

Whichever option you choose for converting the AI model to C code, the generated code must then be compiled. The ST Edge AI model zoo uses STM32CubeIDE to build the application for the board, so the path_to_cubeIDE key must point to the STM32CubeIDE executable:

- On Microsoft® Windows®, the path looks like: C:/ST/STM32CubeIDE_X.XX.X/STM32CubeIDE/stm32cubeide.exe, where X stands for the version number of the IDE

- On Linux®, the typical path is: /opt/st/STM32CubeIDE_X.XX.X/stm32cubeide

tools:
  ...
  path_to_cubeIDE: C:/ST/STM32CubeIDE_1.15.1/STM32CubeIDE/stm32cubeide.exe

3.3. Using your own model

Again in the config_file_examples/deployment_config.yaml file, in the general section, set model_path to the .tflite model that you exported from Teachable Machine:

general:
  model_path: path_to_the_model/model.tflite

3.4. Naming the class for the application display

Still in config_file_examples/deployment_config.yaml, set the class_names key to your own class names, in the same order as the classes in Teachable Machine:

dataset:
  class_names: [SensorTile, IoTNode, STLink, Craddle_Ext, Fanout, Background]
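Because the order of class_names must match the order used during training, it can be safer to generate this line from the labels file exported by Teachable Machine rather than typing it by hand. The sketch below assumes that each line of the exported .txt file has the form `<index> <class name>` (for example `0 SensorTile`); this format is an assumption, so check your own file first. The function names are hypothetical.

```python
def read_class_names(labels_text):
    """Parse '<index> <name>' lines and return the names ordered by index."""
    entries = []
    for line in labels_text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines, e.g. a trailing newline
        index, _, name = line.partition(" ")
        entries.append((int(index), name.strip()))
    entries.sort()  # order by training index, not by position in the file
    return [name for _, name in entries]


def class_names_yaml(names):
    """Format the names as the class_names entry of deployment_config.yaml."""
    return "class_names: [" + ", ".join(names) + "]"
```

For example, `print(class_names_yaml(read_class_names(open("path_to_the_model/labels.txt").read())))` prints a line that can be pasted under the dataset key, assuming the labels file is named labels.txt.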

3.5. Cropping the image

Teachable Machine crops the webcam images to fit the model input size. During the deployment of the ST Edge AI model zoo application, by default, the image is resized to the model input size, hence losing the aspect ratio. We must change this default behavior so that the camera images are cropped instead. Still in config_file_examples/deployment_config.yaml, update the preprocessing section:

preprocessing:
  rescaling:
    scale: 1/127.5
    offset: -1
  resizing:
    interpolation: nearest
    aspect_ratio: crop
  color_mode: rgb
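The difference between the default resizing and the crop option can be sketched in plain Python. The sketch assumes a centered crop of the largest square region followed by a simple nearest-neighbor resize, which is one common way to preserve the aspect ratio; it is not the model zoo or firmware implementation. For brevity, it uses one value per pixel (an RGB image would apply the same steps per channel). It also applies the rescaling above, value * (1/127.5) - 1, which maps pixel values from [0, 255] to [-1, 1].

```python
def center_crop_box(width, height):
    """Largest centered square (left, top, size) that fits in a width x height frame."""
    size = min(width, height)
    return (width - size) // 2, (height - size) // 2, size


def resize_nearest(image, out_w, out_h):
    """Nearest-neighbor resize of a 2-D list of pixels (a list of rows)."""
    in_h, in_w = len(image), len(image[0])
    return [[image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def preprocess(image, size, crop=True):
    """Optionally center-crop, resize to size x size, then rescale to [-1, 1]."""
    if crop:
        left, top, side = center_crop_box(len(image[0]), len(image))
        image = [row[left:left + side] for row in image[top:top + side]]
    resized = resize_nearest(image, size, size)
    scale, offset = 1 / 127.5, -1.0  # values from the preprocessing section above
    return [[p * scale + offset for p in row] for row in resized]
```

With a 640x480 camera frame, for instance, the crop keeps the central 480x480 region starting at column 80, so objects keep their proportions, as they did in the Teachable Machine training images; without the crop, the whole frame is squeezed into a square.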

3.6. Deployment

To deploy your new model on the target, the ST Edge AI model zoo must program the board. First, plug your board into your computer using the ST-LINK port. Make sure that the JP6 jumper is set to STlk.

Then, to start the deployment, enter:

$> python stm32ai_main.py --config-path ./config_file_examples/ --config-name deployment_config.yaml

The expected output is similar to the following:

[INFO] : Running `deployment` operation mode

The random seed for this simulation is 123

[INFO] : Generating C header file for Getting Started...

[I] loading model.. model_path="C:/Users/XXX/my_tree/project/teachable_machine/model/model.tflite"

[I] loading conf file.. "../../stm32ai_application_code/image_classification/stmaic_STM32H747I-DISCO.conf" config=""

[I] "cm7.release" configuration is used

[INFO] : Selected board : "STM32H747I-DISCO Getting Started Image Classification (STM32CubeIDE)" (stm32_cube_ide/cm7.release/stm32h7)

[INFO] : Compiling the model and generating optimized C code + Lib/Inc files: C:/Users/XXX/my_tree/project/teachable_machine/model/model.tflite

[INFO] : Establishing a connection to STM32Cube.AI Developer Cloud to launch the model benchmark on STM32 target...

[INFO] : To create an account, go to https://stm32ai-cs.st.com/home. Enter your credentials:

Username: username@domain.com

Password: **********

[INFO] : Successfully logged

[INFO] : Starting the model memory footprints estimation...

[INFO] : STM32Cube.AI version 8.1.0 used.

[I] setting STM.AI tools.. root_dir="", req_version=""

[INFO] Offline CubeAI used; Selected tools: 8.1.0 (x-cube-ai pack)

[INFO]: Dispatch activations in different ram pools to fit the large model

[I] compiling.. "model_tflite" session

[I] model_path : ['C:/Users/XXX/my_tree/project/teachable_machine/model/model.tflite']

[I] tools : 8.1.0 (x-cube-ai pack)

[I] target : "STM32H747I-DISCO Getting Started Image Classification (STM32CubeIDE)" (stm32_cube_ide/cm7.release/stm32h7)

[I] options : ../../stm32ai_application_code/image_classification/mempools_STM32H747I-DISCO.json

[I] results -> RAM=703,660 IO=0:0 WEIGHTS=539,024 MACC=58,587,516 RT_RAM=34,068 RT_FLASH=113,488 LATENCY=0.000

[INFO]: Weights fit in internal flash

[INFO] : Optimized C code + Lib/Inc files generation done.

[INFO] : Building the STM32 c-project..

[I] deploying the c-project.. "STM32H747I-DISCO Getting Started Image Classification (STM32CubeIDE)" (stm32_cube_ide/cm7.release/stm32h7)

[I] updating.. cm7.release

[I] -> s:copying file.. "network_config.h" to ..\..\stm32ai_application_code\image_classification\Application\Network\Inc\network_config.h

[I] -> s:copying file.. "network.h" to ..\..\stm32ai_application_code\image_classification\Application\Network\Inc\network.h

[I] -> s:copying file.. "network.c" to ..\..\stm32ai_application_code\image_classification\Application\Network\Src\network.c

[I] -> s:copying file.. "network_data.h" to ..\..\stm32ai_application_code\image_classification\Application\Network\Inc\network_data.h

[I] -> s:copying file.. "network_data.c" to ..\..\stm32ai_application_code\image_classification\Application\Network\Src\network_data.c

[I] -> s:copying file.. "network_data_params.h" to ..\..\stm32ai_application_code\image_classification\Application\Network\Inc\network_data_params.h

[I] -> s:copying file.. "network_data_params.c" to ..\..\stm32ai_application_code\image_classification\Application\Network\Src\network_data_params.c

[I] -> s:copying file.. "network_generate_report.txt" to ..\..\stm32ai_application_code\image_classification\Application\Network\Src\network_generate_report.txt

[I] -> s:removing dir.. ..\..\stm32ai_application_code\image_classification\Middlewares\ST\STM32_AI_Runtime\Lib

[I] -> s:copying dir.. "Lib" to ..\..\stm32ai_application_code\image_classification\Middlewares\ST\STM32_AI_Runtime\Lib

[I] -> s:removing dir.. ..\..\stm32ai_application_code\image_classification\Middlewares\ST\STM32_AI_Runtime\Inc

[I] -> s:copying dir.. "Inc" to ..\..\stm32ai_application_code\image_classification\Middlewares\ST\STM32_AI_Runtime\Inc

[I] -> u:copying file.. "ai_model_config.h" to ..\..\stm32ai_application_code\image_classification\Application\STM32H747I-DISCO\Inc\CM7\ai_model_config.h

[I] -> updating cproject file "C:\Users\XXX\my_tree\project\teachable_machine\github\modelzoo-V2-github\stm32ai_application_code\image_classification\Application\STM32H747I-DISCO\STM32CubeIDE\CM7" with "NetworkRuntime810_CM7_GCC.a"

[I] building.. cm7.release

[I] flashing.. cm7.release ['STM32H747I-DISCO', 'DISCO-H747XI']

[INFO] : Deployment complete.

Your application is ready for you to experiment with.
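The `[I] results` line of the log summarizes the footprint of the generated model. If you want to compare these figures across several trainings, a minimal parser such as the hypothetical one below can extract them. Treating RAM, WEIGHTS, RT_RAM, and RT_FLASH as sizes in bytes is our reading of the log rather than a documented format. Under that reading, RAM=703,660 corresponds to about 687 KiB, which is consistent with the log reporting that activations are dispatched across several RAM pools.

```python
import re

# KEY=value pairs such as RAM=703,660 or LATENCY=0.000
FIELD = re.compile(r"([A-Z_]+)=(\S+)")


def parse_results(line):
    """Parse the KEY=value pairs of a '[I] results -> ...' log line."""
    fields = {}
    for key, raw in FIELD.findall(line):
        if ":" in raw:          # e.g. IO=0:0, kept as text
            fields[key] = raw
        elif "." in raw:        # e.g. LATENCY=0.000
            fields[key] = float(raw)
        else:                   # e.g. RAM=703,660 -> 703660
            fields[key] = int(raw.replace(",", ""))
    return fields


line = ("[I] results -> RAM=703,660 IO=0:0 WEIGHTS=539,024 MACC=58,587,516 "
        "RT_RAM=34,068 RT_FLASH=113,488 LATENCY=0.000")
report = parse_results(line)
for key in ("RAM", "WEIGHTS", "RT_RAM", "RT_FLASH"):
    print(f"{key}: {report[key]} bytes ({report[key] / 1024:.1f} KiB)")
```

MACC, the number of multiply-accumulate operations, is a rough indicator of inference cost rather than a memory size, so it is left out of the loop.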

4. STMicroelectronics references

See also: