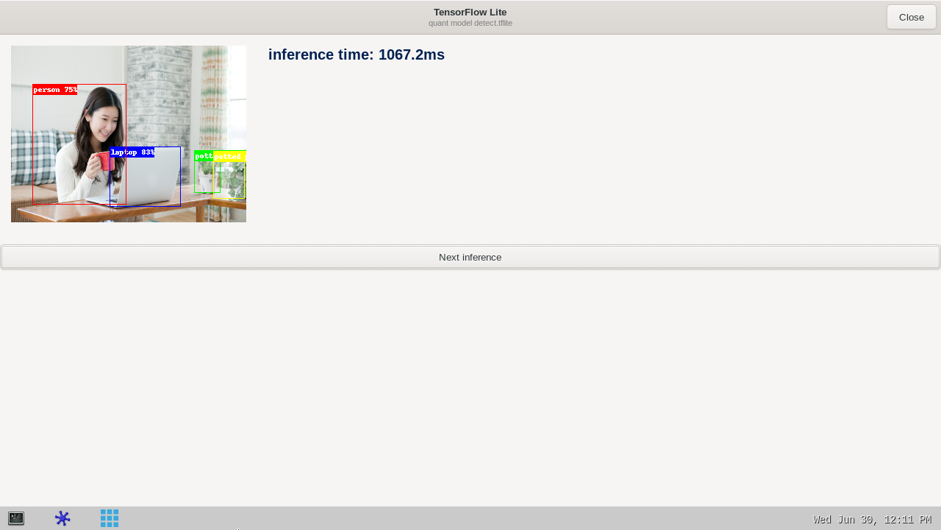

This article explains how to experiment with TensorFlow Lite[1] applications for object detection based on the COCO SSD MobileNet v1 model, using the TensorFlow Lite Python runtime.

1. Description

The object detection[2] neural network model allows identification and localization of a known object within an image.

The application combines OpenCV camera streaming (or test data pictures) with the TensorFlow Lite[1] interpreter, which runs the neural network (NN) inference on the camera frames (or test data pictures).

It is a multi-process Python application that runs the camera preview (on CPU core 0) and the neural network inference (on CPU core 1) in parallel.

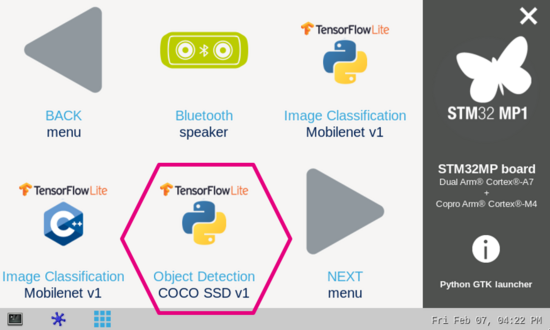
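
The split between the preview process and the inference process can be sketched as follows. This is a minimal illustration and not the code of objdetect_tfl_multiprocessing.py: the names pin_to_core, inference_worker and run_demo, the pipe-based hand-off, and the use of os.sched_setaffinity with the fork start method are assumptions chosen for a Linux target, and the inference step is only a placeholder.

```python
import multiprocessing as mp
import os


def pin_to_core(core):
    """Pin the calling process to one CPU core; return True on success."""
    # os.sched_setaffinity only exists on Linux.
    if not hasattr(os, "sched_setaffinity"):
        return False
    # Only pin to a core this process is actually allowed to use.
    if core not in os.sched_getaffinity(0):
        return False
    os.sched_setaffinity(0, {core})
    return True


def inference_worker(conn, core):
    """Receive frame ids and send back placeholder results until None arrives."""
    pin_to_core(core)
    while True:
        frame_id = conn.recv()
        if frame_id is None:  # sentinel: stop the worker
            break
        # Placeholder for the real neural network inference.
        conn.send({"frame_id": frame_id, "detections": []})
    conn.close()


def run_demo(num_frames=3):
    """Hypothetical driver: preview side on core 0, inference side on core 1."""
    ctx = mp.get_context("fork")  # assumption: Linux target
    parent_conn, child_conn = ctx.Pipe()
    worker = ctx.Process(target=inference_worker, args=(child_conn, 1))
    worker.start()
    pin_to_core(0)  # note: this changes the affinity of the calling process
    results = []
    for frame_id in range(num_frames):
        parent_conn.send(frame_id)
        # A real preview loop would keep displaying frames while waiting.
        results.append(parent_conn.recv())
    parent_conn.send(None)
    worker.join()
    return results
```

In this sketch, run_demo(3) returns three placeholder results in frame order. If the requested core is not available to the process, pin_to_core returns False and the process simply keeps its current affinity.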

The user interface is implemented using Python GTK.

The model used with this application is the COCO SSD MobileNet v1 downloaded from the TensorFlow Lite object detection overview page[2].
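
To make identification and localization concrete, the sketch below turns raw detection outputs into labelled boxes in pixel coordinates. It assumes that each box is given as normalized [ymin, xmin, ymax, xmax] values with one class index and one score per box, which is the layout commonly documented for this kind of SSD model. The function name decode_detections, the 0.5 score threshold and the two-entry label list are illustrative assumptions, not taken from the application.

```python
def decode_detections(boxes, classes, scores, labels,
                      frame_width, frame_height, min_score=0.5):
    """Convert normalized SSD-style outputs into labelled pixel-space boxes."""
    detections = []
    for box, class_id, score in zip(boxes, classes, scores):
        if score < min_score:
            continue  # drop low-confidence candidates
        ymin, xmin, ymax, xmax = box  # assumed normalized to [0, 1]
        detections.append({
            "label": labels[int(class_id)],
            "score": score,
            "left": round(xmin * frame_width),
            "top": round(ymin * frame_height),
            "right": round(xmax * frame_width),
            "bottom": round(ymax * frame_height),
        })
    return detections


# Hypothetical outputs for a 320x240 frame: one confident box, one weak box.
example = decode_detections(
    boxes=[[0.25, 0.5, 0.75, 1.0], [0.0, 0.0, 1.0, 1.0]],
    classes=[0.0, 1.0],
    scores=[0.9, 0.3],
    labels=["person", "bicycle"],  # illustrative label list
    frame_width=320,
    frame_height=240,
)
```

Only the first candidate passes the threshold here, so example holds a single "person" box spanning pixels (160, 60) to (320, 180).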

2. Installation

2.1. Install from the OpenSTLinux AI package repository

After configuring the AI OpenSTLinux package, install the X-LINUX-AI components for this application:

apt-get install tflite-cv-apps-object-detection-python

Then restart the demo launcher:

systemctl restart weston@root

2.2. Source code location

The objdetect_tfl_multiprocessing.py Python script is available:

- in the Openembedded OpenSTLinux Distribution with X-LINUX-AI Expansion Package:

- <Distribution Package installation directory>/layers/meta-st/meta-st-stm32mpu-ai/recipes-samples/tflite-cv-apps/files/object-detection/python/objdetect_tfl_multiprocessing.py

- on the target:

- /usr/local/demo-ai/computer-vision/tflite-object-detection/python/objdetect_tfl_multiprocessing.py

- on GitHub:

3. How to use the application

3.1. Launching via the demo launcher

3.2. Executing with the command line

The objdetect_tfl_multiprocessing.py Python script is located in the userfs partition:

/usr/local/demo-ai/computer-vision/tflite-object-detection/python/objdetect_tfl_multiprocessing.py

It accepts the following input parameters:

    usage: objdetect_tfl_multiprocessing.py [-h] [-i IMAGE] [-v VIDEO_DEVICE] [--frame_width FRAME_WIDTH] [--frame_height FRAME_HEIGHT]
                                            [--framerate FRAMERATE] [-m MODEL_FILE] [-l LABEL_FILE] [--input_mean INPUT_MEAN]
                                            [--input_std INPUT_STD]

    optional arguments:
      -h, --help            show this help message and exit
      -i IMAGE, --image IMAGE
                            image directory with image to be classified
      -v VIDEO_DEVICE, --video_device VIDEO_DEVICE
                            video device (default /dev/video0)
      --frame_width FRAME_WIDTH
                            width of the camera frame (default is 320)
      --frame_height FRAME_HEIGHT
                            height of the camera frame (default is 240)
      --framerate FRAMERATE
                            framerate of the camera (default is 15fps)
      -m MODEL_FILE, --model_file MODEL_FILE
                            .tflite model to be executed
      -l LABEL_FILE, --label_file LABEL_FILE
                            name of file containing labels
      --input_mean INPUT_MEAN
                            input mean
      --input_std INPUT_STD
                            input standard deviation
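
For readers who want to reuse the same command-line interface in their own experiments, the options above could be declared with argparse roughly as follows. This is a sketch under assumptions, not the script's actual source: the defaults for the video device, frame size and framerate come from the help text, while the argument types, the None defaults for the image, model and label options, and the 127.5 defaults for the mean and standard deviation are illustrative guesses.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the documented options."""
    parser = argparse.ArgumentParser(prog="objdetect_tfl_multiprocessing.py")
    parser.add_argument("-i", "--image", default=None,
                        help="image directory with image to be classified")
    parser.add_argument("-v", "--video_device", default="/dev/video0",
                        help="video device (default /dev/video0)")
    parser.add_argument("--frame_width", type=int, default=320,
                        help="width of the camera frame (default is 320)")
    parser.add_argument("--frame_height", type=int, default=240,
                        help="height of the camera frame (default is 240)")
    parser.add_argument("--framerate", type=int, default=15,
                        help="framerate of the camera (default is 15fps)")
    parser.add_argument("-m", "--model_file", default=None,
                        help=".tflite model to be executed")
    parser.add_argument("-l", "--label_file", default=None,
                        help="name of file containing labels")
    # The two defaults below are assumptions; the help text does not state them.
    parser.add_argument("--input_mean", type=float, default=127.5,
                        help="input mean")
    parser.add_argument("--input_std", type=float, default=127.5,
                        help="input standard deviation")
    return parser


# Example: override the model and frame width, keep the other defaults.
args = build_parser().parse_args(["-m", "detect.tflite", "--frame_width", "640"])
```

With this example, args.frame_width is 640 while args.frame_height and args.framerate keep their documented defaults of 240 and 15.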

3.3. Testing with COCO SSD MobileNet v1

The model used for testing is detect.tflite, downloaded from the object detection overview page[2].

To ease launching of the Python script, two shell scripts are available:

- launch object detection based on camera frame inputs

/usr/local/demo-ai/computer-vision/tflite-object-detection/python/launch_python_objdetect_tfl_coco_ssd_mobilenet.sh

- launch object detection based on the pictures located in the /usr/local/demo-ai/computer-vision/models/coco_ssd_mobilenet/testdata directory

/usr/local/demo-ai/computer-vision/tflite-object-detection/python/launch_python_objdetect_tfl_coco_ssd_mobilenet_testdata.sh

4. References